Konstantinos Bitsakos

Ph.D.

Center for Automation Research, Computer Science Department, University of Maryland, College Park

Research

My research interests are in computer vision, image processing, and biological vision.

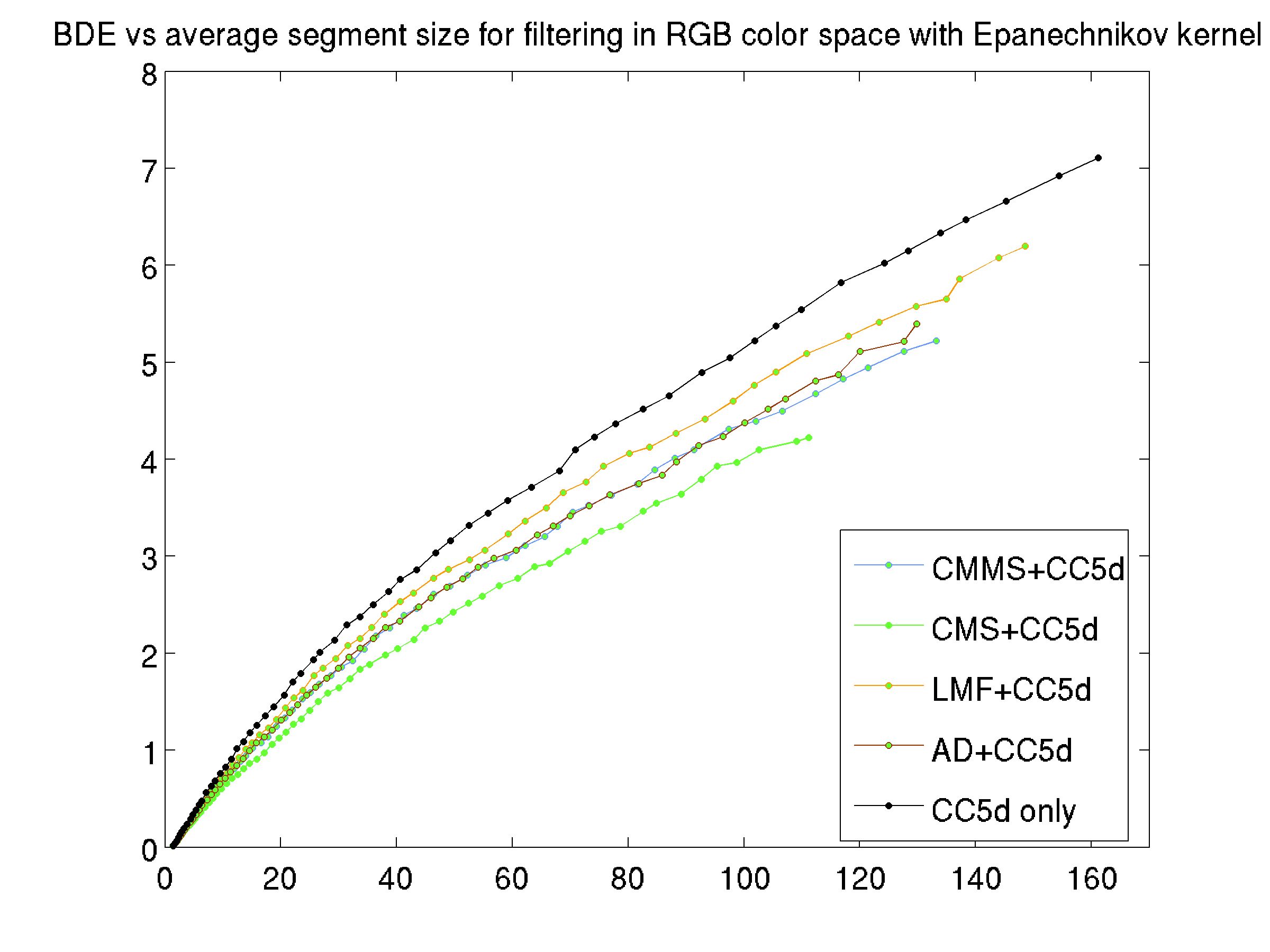

Color-based segmentation is a fundamental low-level computer vision process that is considered a building block for higher-level tasks, such as object recognition. Traditional approaches to segmentation focus only on the clustering part of the process. In our work we deviate from tradition and study segmentation as a two-step process: edge-preserving image filtering followed by pixel grouping. In the image filtering step, we study the mean shift concept and extend it, creating three new methods. The most prominent one, called Color Mean Shift, is experimentally shown to outperform the existing mean shift and bilateral filtering methods; it creates more uniform regions that are separated by crisper boundaries. We then couple the filtering methods with well-known clustering methods and show that the end segmentation result is much better than that obtained with the clustering methods alone.
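The two-step idea (edge-preserving filtering, then pixel grouping) can be illustrated with a minimal sketch. It applies the standard joint spatial-range mean shift filter to a 1D intensity signal and then groups neighbouring samples with a simple threshold. It is not the Color Mean Shift variant described above, and the function names, bandwidths, and the threshold-based grouping are assumptions chosen only for illustration.

```python
import math


def mean_shift_filter(signal, spatial_bw=3.0, range_bw=0.2, iters=10):
    """Edge-preserving smoothing of a 1D signal with standard mean shift.

    Each sample starts at (position, value) and is moved repeatedly to the
    Gaussian-weighted mean of the samples near it in both position and value.
    Bandwidths and iteration count are illustrative assumptions.
    """
    n = len(signal)
    filtered = []
    for i in range(n):
        x, v = float(i), float(signal[i])
        for _ in range(iters):
            wsum = xsum = vsum = 0.0
            for j in range(n):
                dx = (j - x) / spatial_bw
                dv = (signal[j] - v) / range_bw
                w = math.exp(-0.5 * (dx * dx + dv * dv))
                wsum += w
                xsum += w * j
                vsum += w * signal[j]
            new_x, new_v = xsum / wsum, vsum / wsum
            converged = abs(new_x - x) < 1e-4 and abs(new_v - v) < 1e-4
            x, v = new_x, new_v
            if converged:
                break
        filtered.append(v)  # keep the value of the mode that was reached
    return filtered


def group_pixels(filtered, tol=0.1):
    """Hypothetical grouping step: start a new segment at every large jump."""
    labels = [0]
    for prev, cur in zip(filtered, filtered[1:]):
        labels.append(labels[-1] + (1 if abs(cur - prev) > tol else 0))
    return labels


# A noisy step edge: ten samples near 0 followed by ten samples near 1.
noisy = [(0.0 if t < 10 else 1.0) + 0.05 * (-1) ** t for t in range(20)]
smooth = mean_shift_filter(noisy)
print(group_pixels(smooth))
```

Because the range kernel gives almost no weight to samples on the other side of the step, the noise inside each region is averaged away while the jump between the regions stays sharp, which is what makes the subsequent grouping step easy.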

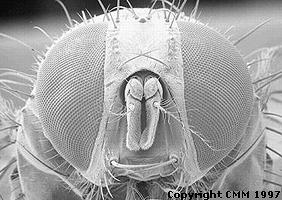

The perspective camera is the dominant model used both to build cameras and to design computer vision algorithms, even though it is not the only possibility. In the biological world, by contrast, a large variety of eye designs exist. It has been estimated that eyes have evolved independently no fewer than 40 times in different parts of the animal kingdom. It is well understood that eye designs always embody complicated compromises between competing physical factors and that specialized eye designs might be better suited to solving specific tasks. In this work we consider the compound eye of flies, in which approximately 3000 simple eyes (ommatidia), with 8 photoreceptors each, capture images significantly different from those captured with conventional cameras. We are interested in whether this eye design facilitates the estimation of 3D structure. First, we present the theory of how distance can be estimated; then we present simulation results indicating that distance estimation is possible with good accuracy if the viewed objects are relatively close to the eye. Such a mechanism, if used by the fly, would be useful in walking conditions.

The purpose of this research is to robustly compute the 3D structure of image patches in real time. Assuming a piecewise planar scene, we develop two novel techniques to estimate the orientation of the planes and their distance from the camera. The first method is based on a new line constraint, which clearly separates the estimation of distance from the estimation of slant. The second method exploits concepts from phase correlation to compute distance and slant information from the change in image frequencies of a textured plane. The two estimates, together with structure estimates from classical image motion, are combined and integrated over time using an extended Kalman filter. Experiments in indoor environments show that the method is stable and robust even in mostly textureless corridors. We are currently extending and testing the method in outdoor environments. This is joint work with Li Yi.
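As background for the second method, the basic phase correlation idea can be sketched in a few lines. The example below only recovers a circular shift between two 1D signals from the phase of their cross-power spectrum; it does not estimate distance or slant, and it is not the method described above. The function names and the naive DFT are assumptions made to keep the sketch free of dependencies.

```python
import cmath
import random


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (adequate for short signals)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                for t in range(n))
        out.append(s / n if inverse else s)
    return out


def phase_correlation_shift(a, b):
    """Return the circular shift s for which b[t] matches a[(t - s) % n]."""
    spec_a, spec_b = dft(a), dft(b)
    cross = []
    for fa, fb in zip(spec_a, spec_b):
        c = fb * fa.conjugate()
        mag = abs(c)
        # Keep only the phase; skip frequencies that carry no energy.
        cross.append(c / mag if mag > 1e-12 else 0j)
    corr = dft(cross, inverse=True)
    # For a pure shift the correlation is an impulse located at the shift.
    return max(range(len(corr)), key=lambda t: corr[t].real)


rng = random.Random(0)
a = [rng.random() for _ in range(32)]
b = [a[(t - 5) % 32] for t in range(32)]  # a shifted circularly by 5 samples
print(phase_correlation_shift(a, b))
```

Normalizing the cross-power spectrum discards amplitude and keeps only phase, which is why the peak remains sharp regardless of the signal's contrast.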

This project is inspired by the Semantic Robot Vision Challenge competition. The goal is to bridge the gap between perception and the symbolic description of objects. The competition is divided into two phases: the internet search phase and the room exploration/object recognition phase. Initially, a list of objects is provided as a text file, and the robot has limited time to search the internet and create representations of those objects. Then the robot has to navigate autonomously and explore a small room in which some of the listed objects are scattered among other objects. Clearly, this project presents unique challenges in both phases. Our current approach is described in detail on this site. Our goal is to move toward more active approaches to space exploration and object recognition. It is easy to imagine a scenario in which the object recognition module concludes, with some uncertainty, that an object matches one on the list and "instructs" the navigation module to move closer and capture more detailed images. Alternatively, when an object is partially occluded, the robot moves to a different area from which it can capture images of the object without occlusions.

Occlusion (or, more accurately, half-occlusion) areas in multiple images consist of those pixels that are hidden in one or more of the images. Occlusion edges are important cues in human vision and facilitate the segmentation process. In this project we try to estimate occlusions directly from filter responses. We take advantage of the fact that the two parts along an occlusion edge have different displacement values. Our goal is not only to detect occlusions at an early stage, but also to address the "boundary ownership" problem.

We analytically develop a set of filters that measure the stretch between two image patches. In experiments with synthetically stretched images, we found that we can accurately recover the stretch with a single filter, even in the case of serious deformations. This is an example of our general philosophy that it is preferable to create (by "warping") special filters that measure image properties rather than to transform the images and apply standard filters. This is joint work with Justin Domke.

Last updated on: February 2011
