UMD Researchers Lead a Comprehensive Survey on Prompting Techniques

Generative artificial intelligence (GenAI) systems are becoming increasingly prevalent in both industry and research settings. These systems rely heavily on prompting, or prompt engineering, where developers and end users interact with the AI through carefully designed prompts. Despite widespread use of and research on prompting, the field still lacks standardized terminology and a clear ontological understanding of what constitutes a prompt, largely because it is still emerging.

In response, University of Maryland undergraduate computer science majors Michael Ilie and Sander Schulhoff, along with Ph.D. student Alexander Hoyle and affiliate computer science Professor Philip Resnik, have led a collaborative, multi-institutional study on prompting techniques for GenAI systems.

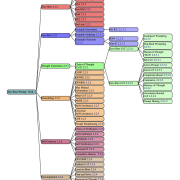

Their paper, "The Prompt Report: A Systematic Survey of Prompting Techniques," addresses these gaps by making a first attempt to categorize the diverse and largely uncharted territory of prompting techniques. The goal is to offer a comprehensive taxonomy and terminology that covers a broad range of existing prompt engineering methods and can accommodate future developments.

“Our paper standardizes methods and terminology in prompt engineering, making it easier for anyone to understand and apply these techniques,” Ilie said. “By providing open-source code for our research, we ensure our work can be validated and reproduced. This foundation enables researchers, developers and even end users to leverage the most advanced and effective techniques, maximizing the potential of generative AI.”

The researchers discuss over 200 prompting techniques, frameworks built around them and critical issues such as safety and security that need to be considered when using them. The paper introduces a comprehensive vocabulary of 33 terms, a taxonomy of 58 text-only prompting techniques and 40 techniques for other modalities. Additionally, the paper presents a meta-analysis of the entire literature on natural language prefix-prompting.

By bringing together diverse perspectives and expertise, the team produced a study that offers a holistic view of the field. The study, which involved researchers from OpenAI, Microsoft and various universities, is the largest of its kind and encompasses thousands of papers on the subject.

“Working with a large team was very beneficial as each person had their unique knowledge of prompting that we included in the paper,” Schulhoff said. “It unites the field into a single document containing extensive foundational work. The paper is already making an impact, with previously unclear terminology being elucidated and prompting techniques being standardized.”

As GenAI systems continue to evolve, the need for standardized prompting techniques will become increasingly important. The study's findings are expected to significantly impact the field of AI research.

“What began as an independent study project that Sander was doing with me turned into an enormous contribution to the current public discussion about large language models,” said Resnik, who holds joint appointments in the University of Maryland's Institute for Advanced Computer Studies and Department of Linguistics. “Right now, designing good prompts for LLMs is alchemy or animal training; people are busily experimenting with ways of getting these systems to produce the results they want, but it's a chaotic process because nobody knows what's actually going on under the hood. The prompt report paper takes a huge step toward creating some order amid the chaos, and it's already having a big impact on the community.”

—Story by Samuel Malede Zewdu, CS Communications

###

Other contributors to the paper include:

Nishant Balepur (University of Maryland)

Konstantine Kahadze (University of Maryland)

Amanda Liu (University of Maryland)

Chenglei Si (Stanford)

Yinheng Li (Microsoft)

Aayush Gupta (University of Maryland)

HyoJung Han (University of Maryland)

Sevien Schulhoff (University of Maryland)

Pranav Sandeep Dulepet (University of Maryland)

Saurav Vidyadhara (University of Maryland)

Dayeon Ki (University of Maryland)

Sweta Agrawal (Instituto de Telecomunicações)

Chau Pham (University of Massachusetts Amherst)

Feileen Li (University of Maryland)

Hudson Tao (University of Maryland)

Ashay Srivastava (University of Maryland)

Hevander Da Costa (University of Maryland)

Saloni Gupta (University of Maryland)

Megan L. Rogers (Texas State University)

Inna Goncearenco (Icahn School of Medicine)

Giuseppe Sarli (Icahn School of Medicine, ASST Brianza)

Igor Galynker (Mount Sinai Beth Israel)

Denis Peskoff (Princeton)

Marine Carpuat (University of Maryland)

Jules White (Vanderbilt)

Shyamal Anadkat (OpenAI)
