Tianyi Zhou Aims to Bridge the Gap Between Humans and AI

Machine learning is responsible for some of the most significant advances in artificial intelligence today, from the burgeoning self-driving car industry to virtual personal assistants like Amazon’s Alexa and Apple’s Siri. However, the field still has a long way to go before it closes the divide between humans and machines.

Tianyi Zhou, an assistant professor of computer science, works at the intersection of machine learning and natural language. His goal is to make AI more human-like by teaching it to learn and make decisions the way people do.

“Since my first day of studying machine learning, I have been impressed by its potential,” says Zhou, who holds an appointment in the University of Maryland Institute for Advanced Computer Studies (UMIACS). “However, I also gradually realized its limitations when deployed in realistic interactive environments and its overall lack of human learning strategies.”

He says that bridging the gap between humans and machines in learning strategies and skills, aligning AI more closely with user preferences and specific tasks, and making AI agents safer and easier to control will mean that the “amazing functionality of AI will be unlocked, and billions of people will be able to benefit from it.”

Applications of this research span many industries, including healthcare, education, social media, finance, agriculture and engineering.

“Our overall goal is to develop efficient, versatile, and trustworthy hybrid intelligence based on the co-evolution between humans and machines,” says Zhou, who is also a core member of the University of Maryland Center for Machine Learning.

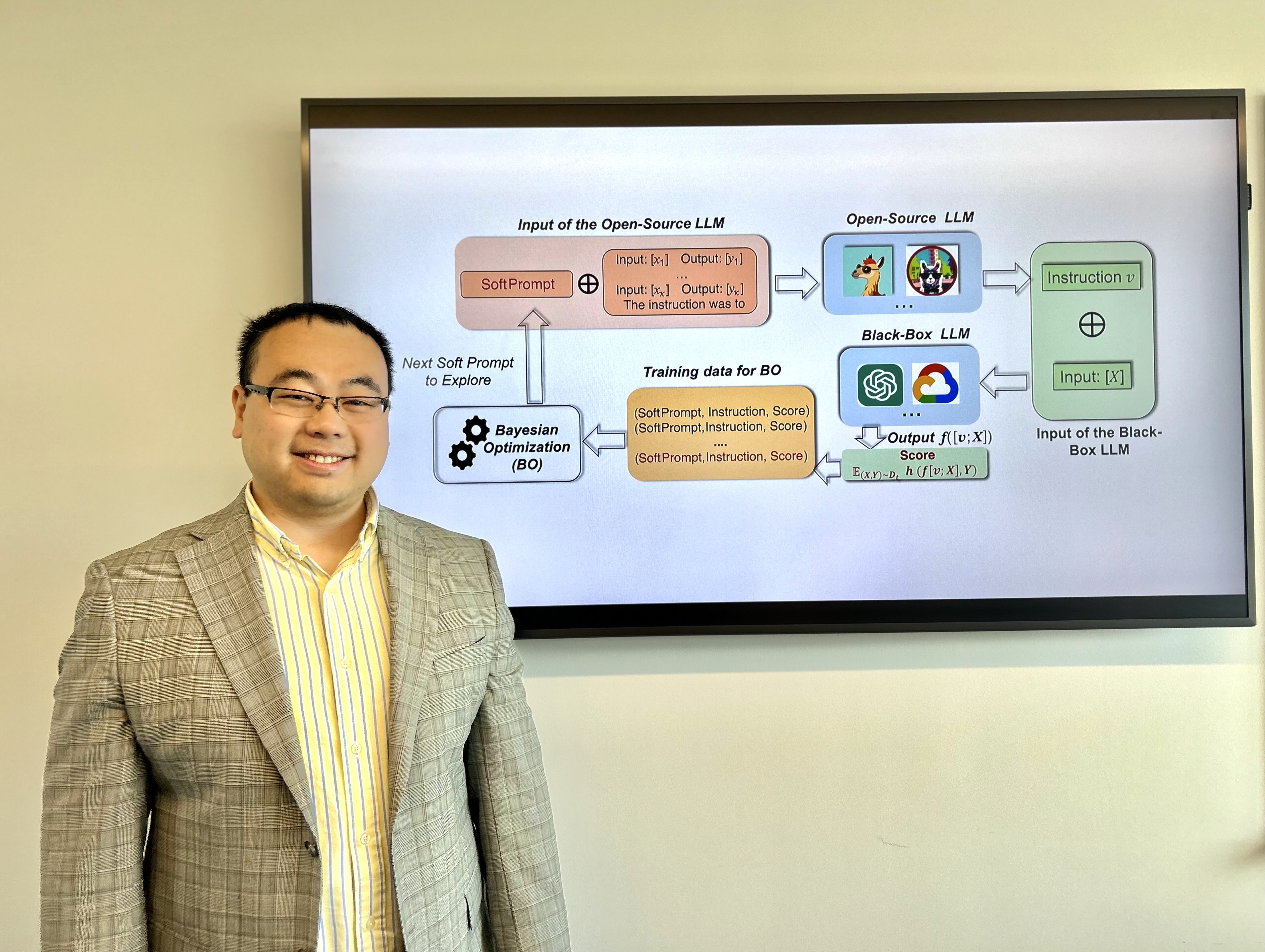

Some of his recent work has focused on controllable AI. This means developing approaches that can monitor and precisely control a model’s training and inference. During training, that involves preprocessing and selecting the data or tasks used to train the model at different learning stages. During inference or generation, it involves finding a sequence of guidance that steers the intermediate steps so the model can efficiently and accurately reach goals defined by humans.

Zhou says controllability is especially important when AI models are used to build agents that interact with humans, or to solve engineering or scientific problems in the real world.

He has also studied human-AI alignment, which focuses on ensuring that AI systems are developed and deployed in line with human values and goals. Essentially, this means making sure that advanced AI systems act in accordance with our ethical principles and multiple objectives.

Zhou says large language models (LLMs) and AI-generated content (AIGC) models can easily produce harmful, biased or violent content if prompted to. For example, they might give instructions for making a bomb or generate violent images and videos. They can also spontaneously produce content that is irrelevant to the human input, fabricate things that do not exist or that contradict reality, or simply state false information confidently. For instance, a model trained on outdated data might tell you that the current U.S. president is Donald Trump.

To make these models safer and more reliable, Zhou and his team study how to train them to better obey human-defined constraints, automatically remove toxic or harmful content, continuously update their parametric memory with time-sensitive knowledge, and avoid generating misinformation and disinformation. To this end, he says, the team is investigating the controllability of LLMs and AIGC models: how to accurately control these models so they follow specific requirements or instructions.

Human-AI alignment is also necessary for creating personal assistants and for AI used in art, avatars or virtual characters, he says.

“Existing human-AI alignment mainly focuses on fine-tuning AI models to generate content preferred by an average human but not a special individual,” Zhou says. “Instead, we are looking into human-AI alignment that aims to improve the generated content of AI models to be close to a single user's preference and intention.”

For example, when we use AI models to build an agent or avatar in a virtual environment, we usually want it not only to look like us but also to speak and behave like us, or like the characters we would like to play.

Similarly, when AI is used to generate stories about the same topic or entities, some users may prefer thrillers while others would rather read science fiction.

“All these require a fine-grained alignment between the AI model and each individual,” Zhou says. “Of course, we cannot afford to train a ChatGPT from scratch for everyone, but we can develop efficient fine-tuning and editing methods that can modify a general-purpose AI model to align well with a single user’s personal preference and customize it for the user’s habits, styles and tasks.”

UMIACS’ computing clusters have been extremely helpful for both his research and the student projects in his classes, enabling many computations that cannot easily be run on PCs or laptops.

These include fine-tuning LLMs with tens of billions of parameters, generating training data with AI models, and training multiple models in a distributed fashion for hundreds to thousands of user tasks or datasets.

Zhou received his doctorate in computer science from the University of Washington in 2021. Before joining UMD in 2022, he was a visiting research scientist at Google.

—Story by Melissa Brachfeld, UMIACS communications group

The Department welcomes comments, suggestions and corrections. Send email to editor [-at-] cs [dot] umd [dot] edu.