AI chatbots lose money every time you use them. That is a problem.

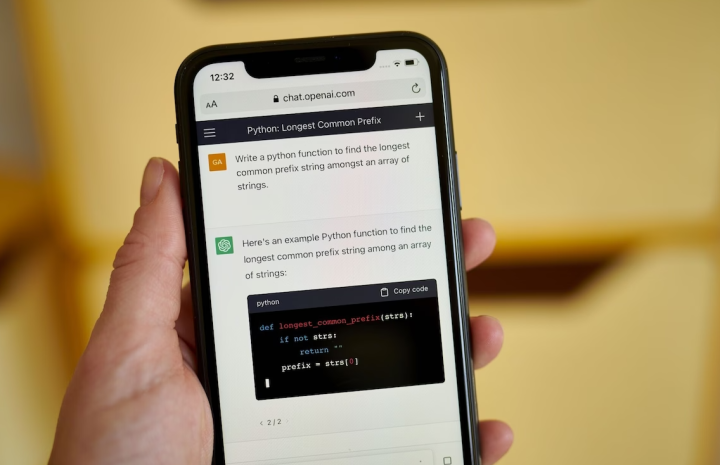

AI chatbots have a problem: They lose money on every chat. The enormous cost of running today’s large language models, which underpin tools like ChatGPT and Bard, is limiting their quality and threatening to throttle the global AI boom they’ve sparked.

Their expense, and the limited availability of the computer chips they require, are also constraining which companies can afford to run them and pressuring even the world’s richest companies to turn chatbots into moneymakers sooner than they may be ready to.

“The models being deployed right now, as impressive as they seem, are really not the best models available,” said Tom Goldstein, a computer science professor at the University of Maryland. “So as a result, the models you see have a lot of weaknesses” that might be avoidable if cost were no object — such as a propensity to spit out biased results or blatant falsehoods.

The tech giants staking their future on AI rarely discuss the technology’s cost. OpenAI (the maker of ChatGPT), Microsoft and Google all declined to comment. But experts say it’s the most glaring obstacle to Big Tech’s vision of generative AI zipping its way across every industry, slicing head counts and boosting efficiency.

The full article is available from the Washington Post.

The Department welcomes comments, suggestions and corrections. Send email to editor [-at-] cs [dot] umd [dot] edu.