Optimizing Computational Dataflow with Machine Learning

What do personal computers (PCs), laptops, and smartphones have in common? They’re all general-purpose computing systems, meaning they can run a wide variety of tasks and offer incredible versatility. From browsing the internet to running complex statistical programs, these systems do it all.

Despite their range of capabilities, these diverse computing platforms share a notable limitation: their hardware design is fixed. A fixed design lets a device perform many kinds of operations, but it also prevents the device from being optimized for any one specific application. As a result, these systems often carry out unnecessary computational steps, wasting resources and energy. In an era of intensifying computational demands, when energy conservation is a global concern, ensuring efficiency is essential.

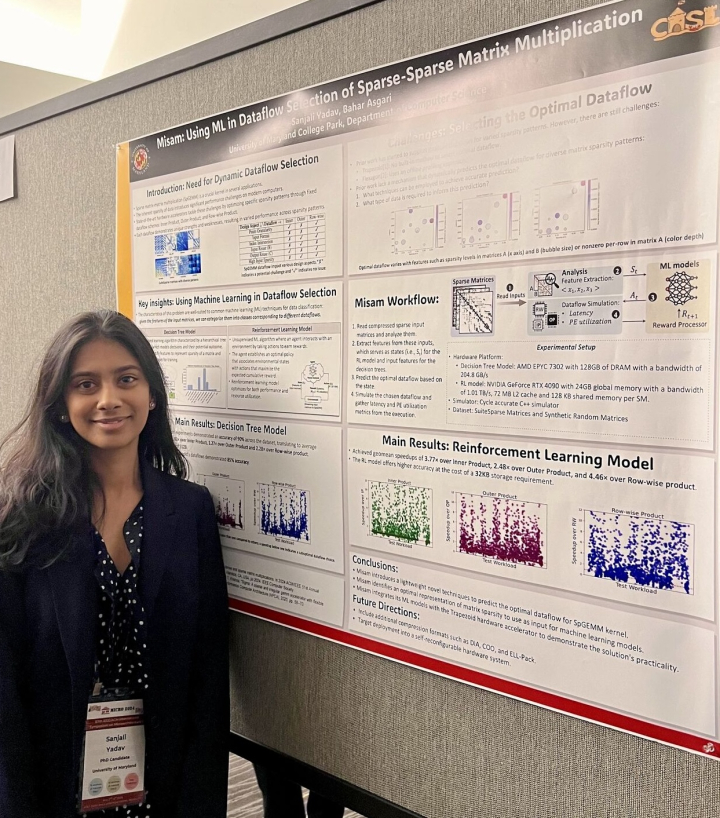

Sanjali Yadav, a first-year doctoral student in computer science at the University of Maryland, is developing a unique solution to this problem. Her innovative approach allows hardware to dynamically adapt to specific tasks, thereby enhancing dataflow efficiency and reducing energy consumption. Her research, supported by a Department of Energy (DOE) grant, earned first place in the graduate division of the Association for Computing Machinery Student Research Competition, held at the 2024 IEEE/ACM MICRO symposium last month in Austin, Texas.

Yadav is advised by Bahar Asgari, an assistant professor of computer science with an appointment in the University of Maryland Institute for Advanced Computer Studies (UMIACS). Asgari is principal investigator of the DOE grant, which supports research on reconfigurable hardware—technology designed to address inefficiencies in general-purpose computing systems.

Much of this work takes place in Asgari's Computer Architecture & Systems Lab (CASL), of which Yadav is a member. The lab's diverse group of researchers aims to develop efficient, scalable computing solutions.

Yadav's contribution to the lab's mission focuses on matrices, mathematical structures used to organize and manipulate data. Sparse matrices are a type of matrix made up mostly of zeros. They can be multiplied with a specialized operation called sparse matrix-matrix multiplication (SpGEMM), which skips over the zeros to save memory and speed up computation. This efficiency makes sparse matrices essential in fields such as scientific computing, graph analytics, and neural networks, a specialized form of machine learning.
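The skip-the-zeros idea can be sketched in a few lines of Python. This is a minimal, illustrative example of one common SpGEMM formulation (row-by-row, often attributed to Gustavson), not the method used in Yadav's work; the storage format (a list of per-row dictionaries) and the function names `to_sparse` and `spgemm` are our own assumptions.

```python
from collections import defaultdict


def to_sparse(dense):
    """Convert a dense list of lists into a list of row dicts.

    Each row dict maps column index -> value and stores only nonzeros,
    so zeros take up no memory.
    """
    return [{j: v for j, v in enumerate(row) if v != 0} for row in dense]


def spgemm(a, b):
    """Row-wise (Gustavson-style) sparse matrix product C = A @ B.

    Only the stored nonzeros of A and B are visited, so the work grows with
    the number of nonzero products rather than with the full dimensions.
    """
    c = []
    for a_row in a:
        acc = defaultdict(float)           # accumulates one output row
        for k, a_val in a_row.items():     # nonzero A[i][k]
            for j, b_val in b[k].items():  # nonzero B[k][j]
                acc[j] += a_val * b_val
        # Drop entries that cancelled to exactly zero.
        c.append({j: v for j, v in acc.items() if v != 0})
    return c


A = to_sparse([[1, 0, 0], [0, 0, 2], [0, 3, 0]])
B = to_sparse([[0, 4, 0], [0, 0, 0], [5, 0, 6]])
print(spgemm(A, B))  # [{1: 4.0}, {0: 10.0, 2: 12.0}, {}]
```

For these two 3-by-3 inputs the sketch performs 3 scalar multiplications, compared with 27 for a naive dense product.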

But sparse matrices aren’t perfect—their irregular structures and unique patterns often hamper performance optimization. Computer scientists have come up with several specialized hardware accelerators to deal with this problem. However, because these devices are designed for specific patterns of matrices, they often perform poorly when matrix patterns deviate from what they were designed for.

“This lack of adaptability made people realize that we need a universal hardware that can deal with a range of matrix patterns and support different types of multiplication,” Yadav explains.
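To make "matrix pattern" more concrete, the sketch below computes a few simple statistics describing where a matrix's nonzeros sit. The statistics and the helper name `pattern_stats` are illustrative assumptions, not the features used by any particular accelerator or by Misam. The two example matrices contain the same number of nonzeros but distribute them very differently, which is the kind of variation a fixed-pattern design can struggle with.

```python
from statistics import mean, pstdev


def pattern_stats(rows, n_cols):
    """Summarize a sparsity pattern.

    `rows` holds, for each matrix row, the column indices of its nonzeros;
    the nonzero values themselves do not affect the pattern.
    """
    lengths = [len(r) for r in rows]
    nnz = sum(lengths)
    avg = mean(lengths)
    return {
        "density": nnz / (len(rows) * n_cols),
        "max_nnz_per_row": max(lengths),
        # Coefficient of variation of row lengths: 0 when every row holds
        # the same number of nonzeros, larger when a few rows dominate.
        "row_imbalance": pstdev(lengths) / avg if avg else 0.0,
    }


n = 8
# Banded: each row has nonzeros only on and next to the diagonal.
banded = [[j for j in (i - 1, i, i + 1) if 0 <= j < n] for i in range(n)]
# Skewed: row 0 is full; every other row has two nonzeros.
skewed = [list(range(n))] + [[0, i] for i in range(1, n)]

for name, rows in (("banded", banded), ("skewed", skewed)):
    print(name, pattern_stats(rows, n))
```

Both matrices hold 22 nonzeros (density 0.34375), but the longest row has 3 nonzeros in the banded case and 8 in the skewed case, and the row imbalance rises from about 0.16 to about 0.72.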

She realized that machine learning (ML), a set of techniques that let computers learn from data and make decisions, could be the ideal way to solve this problem. She developed Misam, named after a star in the constellation Perseus, which uses ML models to predict the best multiplication strategy for each input matrix. Her method delivers speedups of up to 50 times over state-of-the-art static hardware accelerators.
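The article does not describe Misam's model or features, so the following is only a toy sketch of the general idea behind learned strategy selection: describe each input matrix with a small feature vector, then let a trained model choose a multiplication strategy. Here the "model" is a 1-nearest-neighbor classifier in plain Python. The features (density and row imbalance), the names `features` and `NearestNeighborSelector`, and the labeled training examples are all hypothetical. The strategy labels name well-known SpGEMM dataflows, but their pairing with features below is made up, not measured.

```python
import math
from statistics import mean, pstdev

# Hypothetical strategy labels; a real system would map each one to a
# hardware dataflow configuration.
STRATEGIES = ("inner_product", "outer_product", "row_wise")


def features(rows, n_cols):
    """Feature vector (density, row imbalance) for a sparsity pattern.

    `rows` lists, for each row, the column indices of its nonzeros.
    """
    lengths = [len(r) for r in rows]
    avg = mean(lengths)
    density = sum(lengths) / (len(rows) * n_cols)
    imbalance = pstdev(lengths) / avg if avg else 0.0
    return (density, imbalance)


class NearestNeighborSelector:
    """1-nearest-neighbor classifier from feature vectors to strategy labels."""

    def __init__(self):
        self.examples = []  # list of (feature_vector, label)

    def fit(self, samples):
        for feats, label in samples:
            if label not in STRATEGIES:
                raise ValueError(f"unknown strategy: {label}")
            self.examples.append((tuple(feats), label))
        return self

    def predict(self, feats):
        # Label of the closest stored example by Euclidean distance.
        # Features are not normalized in this sketch.
        _, label = min(self.examples, key=lambda ex: math.dist(ex[0], feats))
        return label


# Toy training set: (density, row_imbalance) -> label. These pairings are
# invented for illustration; real labels would come from timing each
# strategy on real matrices and recording the fastest one.
training = [
    ((0.50, 0.05), "inner_product"),
    ((0.01, 0.10), "outer_product"),
    ((0.05, 1.50), "row_wise"),
]

selector = NearestNeighborSelector().fit(training)

diagonal = [[i] for i in range(100)]  # 100-by-100 diagonal pattern
print(selector.predict(features(diagonal, 100)))  # outer_product
```

A nearest-neighbor model needs no training beyond storing examples, which keeps the sketch short; a practical selector might instead use a model such as a decision tree and normalize features so that no single one dominates the distance.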


The Department welcomes comments, suggestions and corrections. Send email to editor [-at-] cs [dot] umd [dot] edu.