Linux Networking Basics

A Short Guide for a Short Course

Michael Marsh

Contents

- Preface

- Chapter 1 Introduction

- Chapter 2 Network Overview

- Chapter 3 Connecting Hosts

- Chapter 4 Interactions Between Layers

- Chapter 5 Network Programming

- Chapter 6 Traffic

Preface

This book is intended as a companion for the University of Maryland course CMSC389Z, which is a three-week Winter Term course on Network Management and Programming in a Linux Environment. While it may be helpful outside of that context as a general introduction to some computer networking concepts, it is not meant to be anywhere close to complete.

Chapter 1 Introduction

This book was written as the text for a 3-week course Network Management and Programming in a Linux Environment at the University of Maryland. The material is very focused on the topics for this course, so there will necessarily be a lot that is omitted, both in breadth and depth.

1.1 Terminology and References

Before we get into any details, let’s begin by defining some terms:

- host – This is a computer, phone, or any other device connected to a network. We use this as a general term for anything on the network.

- network interface – This is something on a host that provides it access to a network. Every host will have at least one of these.

- device – This is another name for a network interface. Often used because it is shorter.

- traffic – This refers to data sent over a network. It will consist of many packets implementing various protocols.

- packet – This is a discrete unit of traffic. The network operates by moving individual packets from their sources to their destinations.

- protocol – This is a set of messages and rules that hosts use to meaningfully communicate. Protocols tell hosts how to “speak” to other hosts in a way that they will understand, and how to interpret those messages.

- network edge – This is the set of hosts that exchange data. Your phone and laptop, as well as the web servers you connect to, are all part of the network edge. There is some network infrastructure that is also considered part of the edge, such as your Wifi router.

- network core – This is the set of hosts that provide service for the network edge. These are owned and managed by large Internet Service Providers, and consist of routers (which we’ll see in Section 3.6), switches (which we won’t discuss, but bear some similarity to routers), and some other miscellaneous devices.

We’ll introduce other terms as needed, but these are the fundamental building blocks of any discussion of networking. We’re going to focus on hosts at the edge of the network, rather than the core.

You may find the following references helpful, both of which are available for free from https://www.cs.umd.edu/\( \sim \)mmarsh/books.html:

- Using the Bash Command Line – including common command-line utilities and scripting

- A General Systems Handbook – including numeric representations, git, Linux system administration, and networking commands

If you plan to do more network programming in C, the following references might be worth the investment:

- TCP/IP Sockets in C, by Michael J. Donahoo and Kenneth L. Calvert

- TCP Illustrated: The Protocols, Volume 1, 2nd Edition, by Kevin R. Fall and W. Richard Stevens

- UNIX Network Programming: Networking APIs: Sockets and XTI, Volume 1, by W. Richard Stevens

1.2 Some Linux Basics

You should familiarize yourself with Using the Bash Command Line, especially Chapters 1 through 3, 5, 7, and 9. You will likely want to refer back to these chapters frequently until you’ve internalized most of the information. Chapters 2, 3, and 5 of A General Systems Handbook will also be very useful, though we will expand on the material in Chapter 5 (Network Commands) in this book.

Another important thing to note when using Linux is how files encode

line endings. There are two common ASCII characters used to indicate

the end of a line: carriage return (\r) and newline

(\n). Newline is also sometimes called line feed. You will see

these abbreviated to CR in LF in much documentation. With two different

characters (representing different functions on a typewriter), what do you

use, and where?

This is where things get complicated. On Linux, line endings use newline

(LF). Macs also use newline now, but used to use carriage return (CR). Windows uses a carriage return followed by a newline (CRLF). The CRLF

encoding is also used by ASCII-based network protocols, like HTTP.

Why do we care about this, when this is a Linux-focused course? Because many students use Windows computers, their file editing

software will produce files with CRLF line endings. Some programs,

like the C compiler, handle these different encodings without

problems. Others, like the bash shell, insist on LF line

endings. There is a helpful utility, called dos2unix, that you

should install in order to convert files. If you are writing a bash

script, make sure you use dos2unix to ensure that it has the correct

line endings.

1.3 Course Structure

For the three-week course, the weeks will cover:

Chapter 2 Network Overview

In this chapter, we will explore the design of the network and the tools used to configure and examine it. We will begin with the high-level network model, then how pairs of hosts are connected, and finally how these are combined into a large distributed system.

2.1 The OSI Model

To understand the design of the network, it is important to familiarize ourselves with the Open Systems Interconnection (OSI) Model. In this model, we create multiple layers, each of which is reponsible for a particular type of service. These layers provide Application Programming Interfaces (APIs) to the layers above and below them.

Within a layer, we define and implement various protocols to handle that layer’s responsibilities. These protocols must adhere to established APIs, so that one protocol at a layer can be replaced with another without impacting the rest of the layers.

By organizing the protocols in this manner, we gain an abstraction of the network. We like abstractions, because they allow us to reason about individual components of a complex system, as well as replace pieces that no longer suit the needs at that layer.

The OSI Model divides the network stack into seven layers:

- Physical

This is how hosts and other devices are actually connected. Physical layer devices include ethernet cables, RF antennas, fiber optic cables, etc. It also includes how bits (or groups of bits) are encoded on those connections (voltages, durations, etc.).

- Datalink (or just Link)

This defines how devices group collections of bits into messages, called frames, each with a specified source and destination. Ethernet and WiFi are examples of Link layer protocols. A network at this layer is generally referred to as a subnet or local network.

- Internetworking (or just Network)

This defines how local networks are connected in a way that allows for world-wide communications. A single Network layer message, called a packet, might be comprised of one or multiple Link layer frames, but a single frame may only contain a single packet’s data. Most of the time when we talk about the Network layer, we are talking about version 4 of the Internet Protocol. When looking at a particular portion of the network, we often refer to subsets of it as subnets, even if we are still referring to the Network layer.

- Transport

The Network layer only defines host-to-host connections. The Transport layer adds multiplexing through at addition of ports, to support process-to-process connections. That is the only thing that the User Datagram Protocol (UDP) does, but the Transmission Control Protocol (TCP) adds reliability and in-order packet delivery as well.

- Session

Some protocols require using multiple Transport layer connections, either on different ports of a single host or to different hosts. When this is required, the Session layer coordinates these.

- Presentation

This layer handles data encoding and decoding, so that the data as sent “on the wire” is readable by the application, and vice-versa.

- Application

This is where users interact with the network, through an interface such as a web browser. There are many Application layer programs, both graphical and command-line-based.

The Session and Presentation layers are often omitted from networking

discussions, because they are used either less frequently or so

transparently that they appear to be missing or part of another layer. We will limit our discussion of these two layers by noting (this will

make more sense later) that the Session layer often appears in applications

such as Voice-over-Internet-Protocol (VoIP) which require coordination between

multiple Transport-layer protocols, and the Presentation layer is often

captured in functions such as htonl.

We typically characterize connections between hosts with several quantities:

- Bandwidth This refers to the speed at which data can be written to

and read from the connection. It is typically given in terms of bits per

second (

bps), with powers-of-10 prefixes such as kbps (\( 10^3 \)bps), Mbps (\( 10^6 \)bps), and Gbps (\( 10^9 \)bps). Note that the typical byte is an octet (8 bits), so you need to divide by 8 to obtain bytes per second, and bytes are usually expressed in powers-of-2 prefixes. - Latency This refers to the time it takes for a single bit written at the source to reach the destination. It is often given in milliseconds, but can be considerably longer.

- Bit-Error Rate All physical media are imperfect, and this refers to the average fraction (possibly, but not always, given as a percentage) of bits transmitted that are either flipped or undiscernable at the destination. It is often abbreviated BER. This does not include losses of data due to overwhelming the network with more data than it can handle.

- Throughput This refers to how quickly we can move data from its source to its destination. The most we could ever achieve is the connection bandwidth, but this is rarely attainable. It is often helpful to consider throughput for a specific layer, in which case the overhead of the lower layers reduces the throughput relative to the bandwidth by predictable amounts.

- Goodput This refers to practical application-layer throughput (the good throughput), which is what applications (and users) see.

2.2 Internet Protocol

The Internet Protocol (IP) has two major versions: IPv4 and IPv6. We will focus on IPv4, but where appropriate we will provide analogous IPv6 information. IP is a layer 3 (Internetworking) protocol, as you might guess from its name.

The purpose of IP is to forward data across a global network. This requires unique addresses for all hosts on the network (though we will see how this requirement can be loosened).

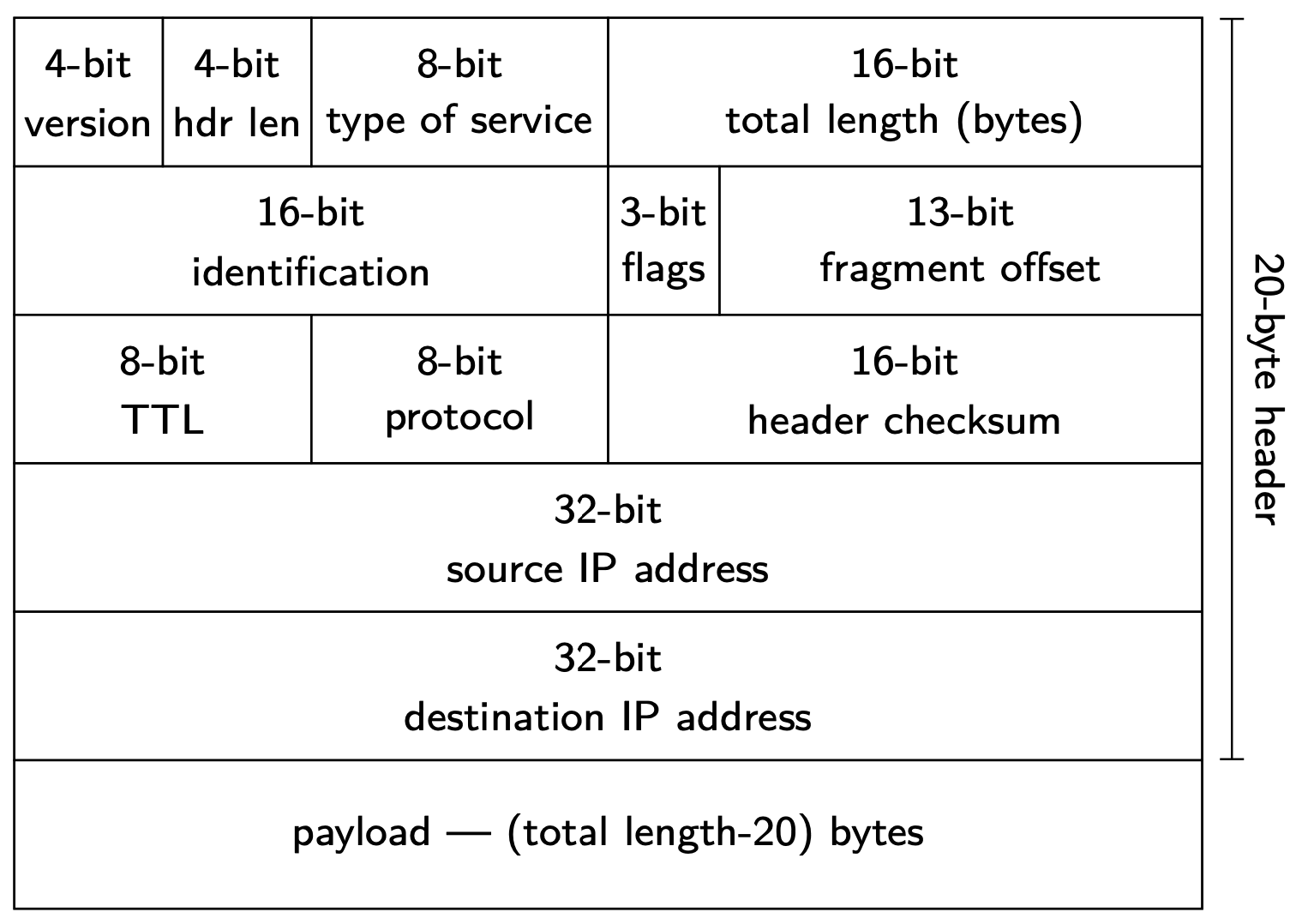

At layer 3, data is divided into packets. A packet has a header and a payload. The payload is the actual data we are interested in delivering, while the header provides sufficient information to move the packet through the network.

IP performs two crucial functions: routing and forwarding. Routing involves distributed protocols that allows participants to create routing tables (also called forwarding tables). Forwarding is the method by which an IP participant determines what to do with a given packet: Is this the destination? Should the packet be forwarded, and to whom? Should the packet be discarded (dropped)?

Hosts that perform routing and forwarding are called routers (because they route traffic). These are only required to implement OSI layers 1–3, though many provide extra functionality for remote administration or run on general-purpose computers. End-hosts (such as your laptop, phone, smart thermostat, or even some lightbulbs) can only be the source or destination for a packet — they do not normally provide forwarding.

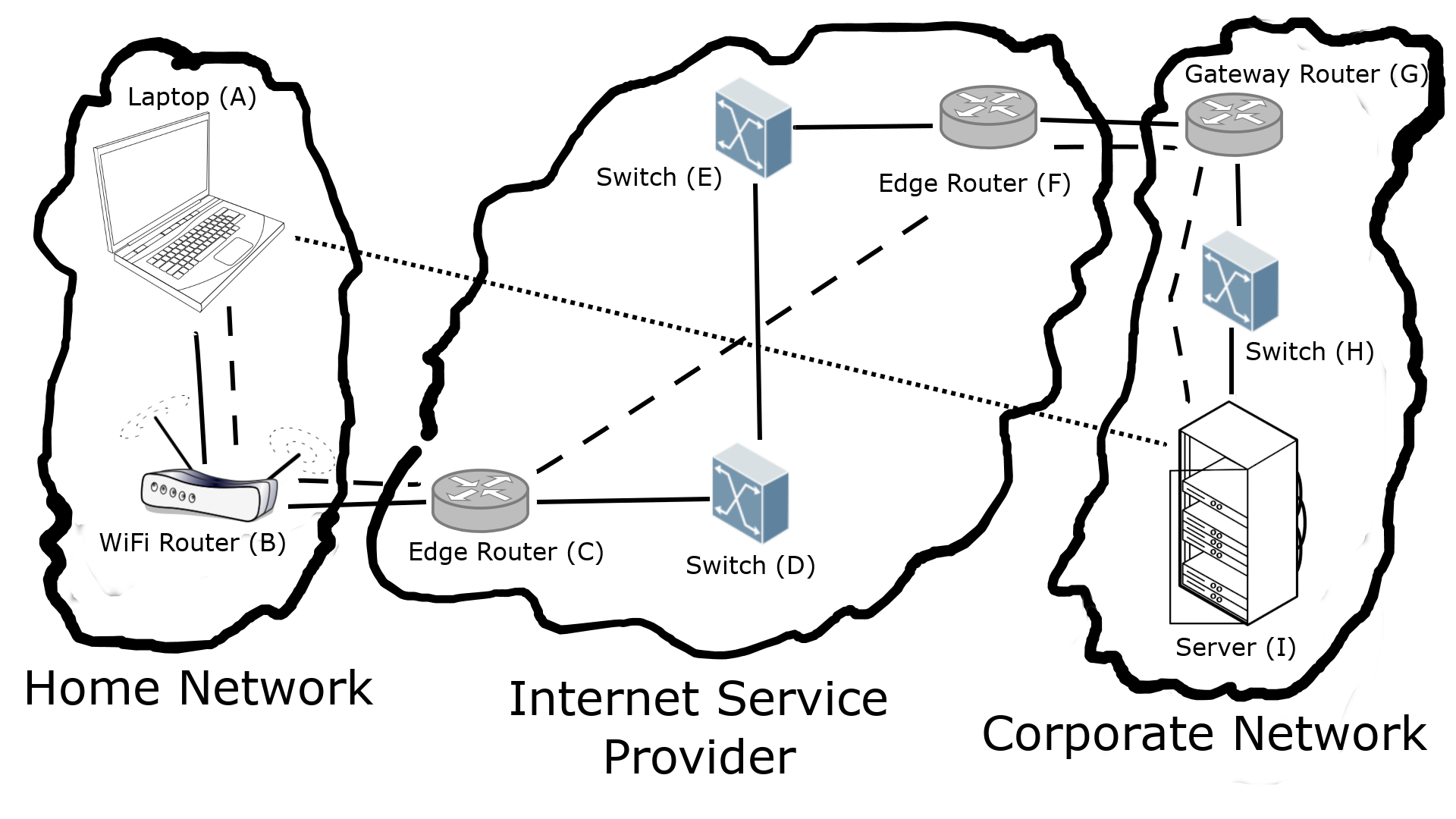

An example of network connection is shown in Figure 2.1. Here we have three networks: a typical home network on the left, an Internet Service Provider (ISP) network in the middle, and a corporate network on the right. Solid lines are layer 1 and layer 2 connections; there might be other layer 1 devices, like repeaters,1 that are not shown. Laptop A is connected via WiFi to a WiFi router B. From the home network’s perspective, this router is the gateway router (GW). This router is connected via Ethernet to an ISP edge router C. C is connected to switch D, which is in turn connected to another switch E. E is then connected to edge router F, which connects to the corporate gateway router G. In the corporate network, G is connected to a switch H, which connects to the server I in the data center. Dashed lines show the layer 3 connections, which we refer to as an overlay on top of layer 2; this only connects the end hosts and the routers. The dotted line is the layer 7 overlay on top of layer 3, where the only hosts are the laptop and server. The ISP will likely refer to B and G as customer edge (CE) routers, and C and F as provider edge (PE) routers.

2.3 Naming

Internet Protocol requires hosts to have unique names (with some

caveats). These names are 32-bit integers, or four bytes (usually

referred to as octets in networking, because there are 8 bits in

each). We generally write these in dotted-decimal notation, so while

it is perfectly valid to refer to a host’s address as 2148040452,

we would generally write it as 128.8.127.4.

How do we get the dotted-decimal form? If we write 2148040452 in

hexadecimal, it is 0x80087f04. An octet is two hexadecimal

characters, so breaking this down gives us 80 08 7f 04. If we then

write these hexadecimal values in decimal, with a dot between octets,

we get 128.8.127.4.

An IP network is divided into subnets that are managed by different authorities. We specify these subnets using Classless Inter-Domain Routing (CIDR) notation. A CIDR block comprises as base address and a prefix length, separated by a slash. The prefix length tells you how many bits in the base address specify the subnet. That is, any address with the same first prefix-length bits as the base address is part of the same network.

To make this clearer, let’s take a look at the subnet 128.8.0.0/16

(this is University of Maryland’s network). Here, the /16 (read

“slash-16”) tells us that the first two octets of 128.8.0.0 specify

the subnet. In this case, that’s the 128.8 part, so any IP address

beginning with 128.8 is part of this network.

Subnets can be subdivided further, and an individual host is sometimes

treated as a /32 CIDR block. Each subdivision of a network typically

denotes hosts that are physically closer together, though there are

cases where this is not necessarily true (such as with Virtual Private

Networks). Forwarding proceeds by moving a packet to the CIDR block

containing the destination address with the longest-possible prefix

length. The forwarding table tells a router which outgoing connections

can receive which CIDR blocks.

3.1 Transport Layer and Multiplexing

The simplest model for an end host is a computer with a single network connection. This might be an ethernet cable, a WiFi radio, or something else. If only one process on a host could access the network at a single time, we would not have much of the functionality that we currently rely on. This was, in fact, the case for many home computer users who used to use a telephone modem to dial into a bulletin board system (BBS). To get around this limitation, we need some way to multiplex connections over a single physical connection.

Layer 4, the Transport Layer, provides us with multiplexing through the use of ports. You can think of ports as an additional address within the computer that identifies not just a particular process, but a specific socket within a process. We will discuss sockets in more detail in Chapter 5.

For our purposes, we will only consider the two most common Transport Layer protocols for most applications: UDP and TCP. Each of these provides port-based multiplexing. These are not the only Transport Layer protocols, and there are even protocols that do not involve port-based multiplexing.

UDP is the User Datagram Protocol. UDP adds a very simple header with 16-bit port numbers on the source and destination side. The destination port enabled the destination host to pass the payload of the UDP packet, the datagram, to the appropriate process. While a single datagram can (but often does not) contain multiple application-layer messages, a single message must be contained within a single datagram. The sender port enables the receiving process to send a reply. Otherwise, UDP adds no additional functionality.

TCP is the Transmission Control Protocol. TCP has a much more complex header, which we will not discuss in detail here (see Chapter 6). For the moment, all that we care about is that, like UDP, it contains source and destination ports. In addition, it contains information that allows each end to determine when packets have been dropped, how fast data can be sent, and how to properly order the data received. Along with retransmission of dropped packets, this means TCP provides a reliable in-order data stream.

How do we decide which of these to use in an application? If we have reasonably short, self-contained messages, then UDP is often sufficient. The advantage of using UDP for these is that it has a much lower overhead cost than TCP. We can add our own reliability at the application layer, if we need it. If we have more continual data, then TCP is often a better choice, since we are guaranteed to have our data streamed to the application layer in the correct order with nothing missing, and taking maximum advantage of the network connection. However, we sometimes want to use UDP for streaming data. This typically occurs in applications where the loss of data is less important than timely delivery. Consider streaming voice: If we lose a packet, we have a very small amount of voice data that is lost, which might not even be noticeable. On the other hand, if we require the lost packet to be detected and retransmitted, we might end up with a very noticeable delay on the receiver’s side, which will only grow as additional packets are dropped and retransmitted.

To summarize:

- Simple single-message protocols are often best with UDP.

- Streaming data protocols where reliability is important are often best with TCP.

- Streaming data protocols where latency is important are often best with UDP.

3.2 netcat — Simple Data Exchange

You are hopefully familiar with the cat command, which reads data

from standard input or specified files and concatenates it to

standard output. There is a network version of this, called netcat. While you would not ordinarily use this to, say, browse the web, it

can be very helpful when testing a new protocol or implementation.

There are two programs commonly referred to as netcat: nc and

ncat. Which one you have installed depends on your operating

system. ncat is provided by nmap.org, as

source code or binary for most operating systems. The options differ

slightly, and even basic usage depends on which version you are using.

Let’s begin by using netcat as a simple web browser. Specifically, we are going to use it to do a search using Google for “netcat”. Here, the two versions are almost the same. Either of the following will work:

nc www.google.com 80

ncat www.google.com 80

Both of these connect to the host www.google.com on port 80 (the standard

port for HTTP), and then wait for input. Run one of these, and then type:

GET /search?q=netcat

and hit Enter or Return. You should see the raw HTML returned by

Google. Here we see the first difference: nc will exit immediately

after receiving the response, while ncat will wait for additional

input.

Netcat can operate as a client or a server. A server is a program that waits for connections requesting its service, and responds to them; a web server is probably the most familiar to people currently. The programs connecting to those servers are clients; think of a web browser. We will start with netcat as a client (as we did above), but first let’s look at some general options common to both client and server operation.

| Option | Meaning | Notes |

-4 |

Use only IPv4 | |

-6 |

Use only IPv6 | |

-u |

Create UDP connections | The default is TCP |

-v |

Verbose output |

We have seen some basic client operation already. The client behavior can be summarized as:

- Connect to a server.

- Send a message.

- Wait for a response.

The options we provide affect this behavior. We have already seen the

-u option, to make a UDP connection instead of a TCP connection. As

a client, you must always supply a hostname and a port

(www.google.com and 80, in our previous example). While there are

client-specific options, you are less likely to use them. See the manpage

for your particular version of netcat for details.

While it is possible to use netcat as a client generally, it is more useful

for debugging servers. Since many protocols are binary, you will often

want to store the messages to send in files, and redirect them using the

shell’s < operator:

nc example.com 1234 < message

This is also useful for text-based protocols, to save typing.

Just as running netcat as a client is useful for debugging servers, running netcat as a server can help you debug clients. It is also useful if you just need to capture messages from a client. We will see other ways to capture message in Chapter 6, but if all you need is the application-layer payload, a netcat server could be all that you need.

There are more options for running a server, and this is where the

differences between nc and ncat are more noticeable.

| Option | Meaning | Notes |

-l |

Listen for connections | Basic server option |

-p <port> |

Listen on port <port> |

Only for ncat, nc just takes the port as a regular option |

-k |

Keep the socket open | Don’t exit after the initial client disconnects |

Here are examples of how you would start a netcat server listening on port 1234:

nc -l 1234

ncat -l -p 1234

If you run either of these and then run (in a separate terminal)

nc 127.0.0.1 1234

you can type on one terminal and see it appear on the other. This

works in either direction. When you close one side (with Ctrl-C or

Ctrl-D), both sides will close.

3.3 Standard Ports and Services

There are a lot of common network protocols, such as HTTP, SMTP (email), SSH, and DNS. Whether they use TCP or UDP, these have standardized ports that all applications supporting those protocols should use by default. If you’re implementing one of these applications, you need to know these standard ports. Additionally, you might be logging attempted connections to your host, and want to know what possible attackers are attempting to connect to.

On Posix systems, such as Linux or MacOS, these standard ports are

enumerated in the file /etc/services. A typical line might look like

http 80/tcp www www-http # World Wide Web HTTP

The first column tells us the protocol, which in this case is HTTP

(Hypertext Transfer Protocol). The next column tells us the port (80)

and layer 4 protocol (TCP) for this service. The remainder of the line

contains common names for the service, with general comments following

the # comment character.

You are not likely to need to refer to this very often, but it is useful to know where to find this information if you need it.

3.4 Finding Active Ports

The typical modern operating system has a lot of services running that receive messages over the network. It also tends to send a lot of messages, both for user-initiated actions as well as background tasks. All of these employ network ports, so it is useful to be able to see what ports are in use.

The standard Linux command to view ports is netstat. Technically,

this shows the sockets that are in use, which can include local

(non-network) sockets. The simplest usage is

root@efcc0d32f10f:/# netstat

Active Internet connections (w/o servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

Active UNIX domain sockets (w/o servers)

Proto RefCnt Flags Type State I-Node Path

This is running on a docker container with no active connections. It does, however, have services listening:

root@efcc0d32f10f:/# netstat -a

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 localhost:mysql *:* LISTEN

tcp 0 0 *:http *:* LISTEN

Active UNIX domain sockets (servers and established)

Proto RefCnt Flags Type State I-Node Path

unix 2 [ ACC ] STREAM LISTENING 53476 /var/run/mysqld/mysqld.sock

The -a flag tells netstat to list all sockets, whether there are

active connections or not. We can see that there are two network

sockets running, and one Unix (non-network) socket. The first network

socket is listening for connections from localhost only, on the

standard TCP port used by MySql. The second network socket is listening

for HTTP connections from anywhere over TCP.

If we look in /etc/services, we can find out what these ports are:

root@efcc0d32f10f:/# grep mysql /etc/services

mysql 3306/tcp

mysql 3306/udp

mysql-proxy 6446/tcp # MySQL Proxy

mysql-proxy 6446/udp

root@efcc0d32f10f:/# grep http /etc/services

# Updated from http://www.iana.org/assignments/port-numbers and other

# sources like http://www.freebsd.org/cgi/cvsweb.cgi/src/etc/services .

http 80/tcp www # WorldWideWeb HTTP

http 80/udp # HyperText Transfer Protocol

https 443/tcp # http protocol over TLS/SSL

https 443/udp

http-alt 8080/tcp webcache # WWW caching service

http-alt 8080/udp

We can identify the relevant lines (exact service match, and TCP) as

mysql 3306/tcp

http 80/tcp www # WorldWideWeb HTTP

This is a bit cumbersome, so we will add the -n flag, to leave numbers

as numbers, and not look them up:

root@efcc0d32f10f:/# netstat -an

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

Active UNIX domain sockets (servers and established)

Proto RefCnt Flags Type State I-Node Path

unix 2 [ ACC ] STREAM LISTENING 53476 /var/run/mysqld/mysqld.sock

Here we see not only that the ports are given numerically, but so are

localhost (127.0.0.1) and * (0.0.0.0). This will also tend to

be faster, especially once we are dealing with remote connections,

where we would have to perform a reverse DNS lookup to map IP

addresses to host names.

We can also limit ourselves to TCP sockets:

root@efcc0d32f10f:/# netstat -ant

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

UDP sockets:

root@efcc0d32f10f:/# netstat -anu

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

or both:

root@efcc0d32f10f:/# netstat -antu

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

We can also request the process name and ID for the sockets:

root@efcc0d32f10f:/# netstat -antup

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 479/apache2

We do not always get this information, though.

An important thing to note is that we can list the arguments separately or combined, and the order does not matter:

root@efcc0d32f10f:/# netstat -a -n -t -u

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

root@efcc0d32f10f:/# netstat -aunt

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

root@efcc0d32f10f:/# netstat -tuna

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

root@efcc0d32f10f:/# netstat -taun

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

It is also worth noting that some versions of netstat behave

slightly differently. On MacOS, for example, the -u flag specifies

Unix sockets, not UDP.

We can also see what an active connection looks like:

root@efcc0d32f10f:/# nc localhost 80

root@efcc0d32f10f:/# netstat -taun

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

tcp 0 0 127.0.0.1:80 127.0.0.1:35226 SYN_RECV

tcp 0 0 127.0.0.1:35226 127.0.0.1:80 ESTABLISHED

The first command is run in a separate shell. We can see that there

are two new sockets, one on port 80 that has received a connection

from port 35226 and another on port 35226 that has established a

connection to port 80. A normal interaction via a web browser would

have both of these sockets in the ESTABLISHED state, which is part

of the TCP protocol.

| Argument | Meaning |

-a |

Show all sockets |

-t |

Show TCP sockets |

-u |

Show UDP sockets |

-n |

Show numbers, not names |

-p |

Show PID and process name |

3.5 Local Networking

Local networking is handled primarily by layer 2, the datalink layer. Linux interacts with layer 2 through devices. These might be Ethernet cards, WiFi radios, cellular radios, or even internal virtual devices. In practice, we need to know very little about the layer 2 specifics, since the Linux kernel abstracts this for us. Since applications more directly work with layer 3, we will also see how this interacts with layer 2 at the local level.

3.5.1 Examining Devices with ifconfig (The Old Way)

We can examine all of the existing devices on our system with the

command ifconfig. By default, it will only show the active

devices. The -a option will list all devices. Here is an example, from the

cmsc389z docker image:

crow@d1b9b82f7557:/$ ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 15 bytes 1226 (1.2 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

crow@d1b9b82f7557:/$

crow@d1b9b82f7557:/$ ifconfig -a

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 15 bytes 1226 (1.2 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ip6tnl0: flags=128<NOARP> mtu 1452

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 1000 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

tunl0: flags=128<NOARP> mtu 1480

tunnel txqueuelen 1000 (IPIP Tunnel)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Consider the following lines for eth0 (Ethernet device 0):

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

The first line tells us that the device is “up” and “running”, meaning it is ready to send and receive traffic. It also tells us that the device supports both broadcast (send a message to every host on the local network) and multicast (send a message to a specific set of hosts, possibly on other networks). Further, it tells us that this device supports data frames up to 1500 bytes (the Maximum Transmission Unit, or MTU).

The second line tells us the internet address for this device — each device will have its own address. It also specifies a netmask, which we can use to identify IP addresses on this same local network by performing a bitwise-AND of the address and netmask. If these values are equal, then they are on the same subnet. The broadcast address is the highest address in the subnet, and messages sent to this address will be delivered to the entire subnet.

The third line tells us the layer 2 (ether) address for the device. This is often called the MAC (Media Access Control) address. While

IP addresses are written in dotted-quad format, MAC addresses are

written in colon-separated hexadecimal, where the colons separate

individual bytes.

3.5.2 Examining Devices with iproute2 (The New Way)

While ifconfig is a useful command to know, and still commonly

referenced, we are not going to use it further. There is a newer

networking package called iproute2, that was introduced in 2006, and

this is what we will use. The primary command in this package is

ip, which has a number of subcommands. We will begin with the ip

link subcommand, which is analogous to ifconfig:

crow@d1b9b82f7557:/$ ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/tunnel6 :: brd ::

496: eth0@if497: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

We can look at a specific device with ip link show:

crow@d1b9b82f7557:/$ ip link show eth0

496: eth0@if497: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

We can see most of the information that ifconfig eth0 would show us,

with additional information. Some of this will make more sense soon.

What we do not see is the layer 3 information. We can view this with

ip address show:

crow@d1b9b82f7557:/$ ip address show eth0

496: eth0@if497: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Now we see the rest of the information we looked at for ifconfig, but

instead of a netmask, we get the IP address in CIDR notation.

We can see additional layer 3 information for our local network with

the ip route subcommand:

crow@475283dd6ec2:/$ ip route

default via 172.17.0.1 dev eth0

172.17.0.0/16 dev eth0 proto kernel scope link src 172.17.0.2

This tells us that the default route, for anything not specified by an

explicit route, is using the gateway router with address 172.17.0.1,

and which is reachable via the eth0 device. Further, it tells us

that any destination in the 172.17.0.0/16 CIDR block is reached via

the eth0 device, and our source address for this is

172.17.0.2. Note that this is the same address that appears in ip

address show eth0.

3.5.3 Creating Virtual Devices

At the beginning of this chapter, we mentioned virtual devices at

layer 2. The most common virtual device is loopback, and you might

have noticed this in the output of ifconfig and ip link. The

device name is usually lo or lo0. This is a virtual device for

delivering layer 3 traffic between processes on the same host, which

has the special name localhost. It has a much simpler frame

structure than Ethernet, and is extremely fast (high bandwidth, low

latency).

There are other virtual devices often found on a host. Tunnels provide virtual devices that encapsulate data from one protocol in another, either to translate between protocols (like forwarding IPv6 packets through an IPv4 network) or to make traffic appear to flow through a different local network (such as a Virtual Private Network). Virtual devices are also used to move data between a virtual machine or container’s network and the host’s network.

We can create our own virtual devices using the ip link command by adding

a new link of type veth (virtual ethernet). This must be done as the

root user, as must most of the rest of the commands in this section. root@eaa156967336:/# ip link add v0 type veth peer name v1

root@eaa156967336:/# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/tunnel6 :: brd ::

6: v1@v0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff

7: v0@v1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

The ip link add command adds a new device, in this case named v0. We specify veth as the type, which then requires us to define a peer

device locally, which we name v1 with the peer name argument. Note

that we now have two devices, which are shown as v0@v1 and v1@v0. The

actual device name is given before the @, and the peer device is given

after the @:

root@eaa156967336:/# ip link show v0

7: v0@v1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

What have we actually done here? We have essentially created a virtual ethernet cable (Figure 3.1). Technically, we have actually created a cable with a virtual NIC on either end, but this cable analogy will help us understand the structure we are building.

These devices are, by default, created in the down state. That is, they

are not ready to send or receive data. In order to do this, we must first

add IP addresses to the devices:

root@eaa156967336:/# ip addr show v0

7: v0@v1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

root@eaa156967336:/#

root@eaa156967336:/# ip addr add 1.2.3.4/32 dev v0

root@eaa156967336:/# ip addr show v0

7: v0@v1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

inet 1.2.3.4/32 scope global v0

valid_lft forever preferred_lft forever

We see that initially there is no address set for device v0. We then

run ip addr add, after which the address has been set. Note that we

have to specify the address as a /32 CIDR block, and the device is

given as the last option. Now we can bring the link up:

root@eaa156967336:/# ip link set v0 up

root@eaa156967336:/# ip link show v0

7: v0@v1: <NO-CARRIER,BROADCAST,MULTICAST,UP,M-DOWN> mtu 1500 qdisc noqueue state LOWERLAYERDOWN mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

We cannot send data through this device until the other end of the link, the

peer v1, has also been configured and brought up:

root@eaa156967336:/# ip addr add 1.2.3.5/32 dev v1

root@eaa156967336:/# ip link set v1 up

root@eaa156967336:/# ip addr show v1

6: v1@v0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff

inet 1.2.3.5/32 scope global v1

valid_lft forever preferred_lft forever

At this point, we essentially have connected the two devices as shown in Figure 3.2. We will see how to add forwarding routes to these devices and make them behave like physical links in Section 3.7.

3.6 End-to-End Connections

We have already seen how we can use netcat to send data across a connection, and this is certainly one way verify that a network connection is active. That requires a specific application to run at each end, however, so it is more a test of a data protocol than connectivity.

If what we want to test is whether it is possible to send any data between two hosts, we want something lower-level that is (almost) always present. Fortunately, there is a protocol that can help us with this, called the Internet Control Message Protocol, or ICMP.

ICMP has a number of uses. Mostly, it is used by the network to signal problems, such as a subnet or host being unreachable. All hosts implementing IP must also implement at least some of the ICMP protocol. It also has a pair of messages called Echo Request and Echo Response. We collectively refer to these as ping.

We can use the command ping to send a series of ICMP Echo Requests

to a remote host, and wait for the corresponding responses. ping

sends a packet every second, and records the round-trip-time (RTT)

which is the sum of the one-way latencies. It also keeps track of how

many requests do not receive responses, providing a measure of the

packet loss on the connection.

root@eaa156967336:/# ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

64 bytes from 8.8.8.8: icmp_seq=1 ttl=37 time=18.8 ms

64 bytes from 8.8.8.8: icmp_seq=2 ttl=37 time=21.5 ms

64 bytes from 8.8.8.8: icmp_seq=3 ttl=37 time=11.5 ms

64 bytes from 8.8.8.8: icmp_seq=4 ttl=37 time=13.4 ms

64 bytes from 8.8.8.8: icmp_seq=5 ttl=37 time=13.8 ms

64 bytes from 8.8.8.8: icmp_seq=6 ttl=37 time=16.1 ms

64 bytes from 8.8.8.8: icmp_seq=7 ttl=37 time=19.9 ms

64 bytes from 8.8.8.8: icmp_seq=8 ttl=37 time=20.6 ms

64 bytes from 8.8.8.8: icmp_seq=9 ttl=37 time=13.0 ms

64 bytes from 8.8.8.8: icmp_seq=10 ttl=37 time=16.2 ms

64 bytes from 8.8.8.8: icmp_seq=11 ttl=37 time=18.4 ms

64 bytes from 8.8.8.8: icmp_seq=12 ttl=37 time=19.3 ms

--- 8.8.8.8 ping statistics ---

12 packets transmitted, 12 received, 0% packet loss, time 11037ms

rtt min/avg/max/mdev = 11.514/16.865/21.451/3.187 ms

Since the RTT varies from packet to packet, ping presents some

statistics at the end. Roughly 60% of packets are expected to have

RTTs within one standard deviation (mdev) of the average (avg). We can also limit the number of ping requests with the -c option. See the documentation for more details.

Not all hosts will respond to ping requests, so sometimes we will see 100% packet loss:

root@eaa156967336:/# ping 2.3.4.5

PING 2.3.4.5 (2.3.4.5) 56(84) bytes of data.

--- 2.3.4.5 ping statistics ---

7 packets transmitted, 0 received, 100% packet loss, time 6162ms

In this case, we do not know whether there is no host with address

2.3.4.5, whether it is not responding to pings, or if there is a

problem with the connection.

We have another tool that can shed some light on this, called

traceroute. This takes advantage of a field in the IP header called

Time-to-Live, or TTL. Each router that receives a packet decrements

the value of this field, and if it reaches 0 the packet is

discarded. The main purpose of this is to prevent packets from

propagating indefinitely in the event of a routing loop. This occurs

when a router A forwards a packet to destination d, and some

router B further along the forwarding path sends it back to

A. When the TTL reaches 0, the router discarding the packet sends an

ICMP TTL Exceeded message back to the source.

How does traceroute use the TTL? It begins by sending a packet to

the destination with a TTL of 1. This causes the first router to send

a TTL Exceeded message back, which includes the router’s IP address as

the source. It then increases the initial TTL by 1, in order to learn

the IP address of the second router. This continues until either the

destination responds or a certain number of failures are observed. root@eaa156967336:/# traceroute 8.8.8.8

traceroute to 8.8.8.8 (8.8.8.8), 30 hops max, 60 byte packets

1 172.17.0.1 (172.17.0.1) 2.479 ms 0.016 ms 0.006 ms

2 Fios_Quantum_Gateway.fios-router.home (192.168.1.1) 4.587 ms 4.861 ms 5.249 ms

3 lo0-100.WASHDC-VFTTP-368.verizon-gni.net (72.83.250.1) 15.181 ms 14.913 ms 14.952 ms

4 100.41.32.46 (100.41.32.46) 21.881 ms 21.711 ms 23.656 ms

5 0.ae1.GW16.IAD8.ALTER.NET (140.222.3.87) 24.254 ms 0.ae2.GW16.IAD8.ALTER.NET (140.222.3.89) 15.191 ms 0.ae1.GW16.IAD8.ALTER.NET (140.222.3.87) 24.418 ms

6 204.148.170.158 (204.148.170.158) 23.847 ms 9.642 ms 9.502 ms

7 * * *

8 142.251.70.110 (142.251.70.110) 12.857 ms dns.google (8.8.8.8) 11.014 ms 10.285 ms

What we see on each line is the TTL, the IP address (and possibly

hostname) of the router at that distance, and then three round-trip

times. Why three? Because traceroute actually repeats each distance

probe three times, to show the statistical variation. We also

sometimes see multiple router addresses on a line. That is because

there are often multiple paths from a source to a destination, so we

do not always see the same routers along the way. This also means that

just because two routers appear on adjacent lines in the traceroute

output, that does not mean they are directly connected.

Let’s see our failed ping:

root@eaa156967336:/# traceroute 2.3.4.5

traceroute to 2.3.4.5 (2.3.4.5), 30 hops max, 60 byte packets

1 172.17.0.1 (172.17.0.1) 0.073 ms 0.013 ms 0.009 ms

2 Fios_Quantum_Gateway.fios-router.home (192.168.1.1) 9.976 ms 10.308 ms 10.273 ms

3 lo0-100.WASHDC-VFTTP-368.verizon-gni.net (72.83.250.1) 22.969 ms 23.104 ms 23.118 ms

4 100.41.32.44 (100.41.32.44) 23.456 ms 100.41.32.46 (100.41.32.46) 23.730 ms 23.492 ms

5 0.ae1.GW12.IAD8.ALTER.NET (140.222.234.27) 24.603 ms 0.ae2.GW12.IAD8.ALTER.NET (140.222.234.29) 23.815 ms 0.ae1.GW12.IAD8.ALTER.NET (140.222.234.27) 24.313 ms

6 63.88.105.94 (63.88.105.94) 23.912 ms 11.488 ms *

7 81.52.166.172 (81.52.166.172) 113.875 ms 108.002 ms *

8 ae324-0.ffttr7.frankfurt.opentransit.net (193.251.240.102) 103.174 ms 104.060 ms 103.430 ms

9 * * *

10 * * *

29 * * *

30 * * *

Each * represents a packet sent for which no response was

received. The lines with * * * received no responses from those TTL

values. We have omitted most of these. From this, we can see that the

last router to receive our packet was 193.251.240.102. So, what does

this tell us? That depends on what we know about the destination and

the router. We will revisit this in Chapter 4.

There is an important consideration when looking at these IP

addresses. We noted in Section 3.5 that every device

on a host has its own IP address. A router has many network devices,

and the IP address we see is for the device that received the packet

and decremented the TTL to 0. That is, what we are learning are the

“near-end” IP addresses of the router. It is entirely possible that

the router at 193.251.240.102 has another device in, say,

2.3.0.0/16.

3.7 Network Namespaces

We have looked at the network stack (there is still a lot left to explore), and seen how each host on the network has its own stack. Linux also allows us to have multiple independent network stacks on the same host. It does this using network namespaces. Much like namespaces in programming languages, network namespaces make their devices (objects) visible only to other processes (methods) and devices within the same namespace.

The ip netns subcommand manages network namespaces in Linux. You can

view the existing namespaces:

root@eaa156967336:/# ip netns

root@eaa156967336:/# ip netns show

root@eaa156967336:/#

These commands are equivalent, and you can see that, by default, there are no namespaces defined.

We can add a namespace with ip netns add:

root@eaa156967336:/# ip netns add foo

root@eaa156967336:/# ip netns

foo

We can also delete them with ip netns del:

root@eaa156967336:/# ip netns del foo

root@eaa156967336:/# ip netns

root@eaa156967336:/#

Any program can be run in a namespace using ip netns exec:

root@eaa156967336:/# ip netns add foo

root@eaa156967336:/# ls

bin dev home lib32 libx32 mnt proc run srv tmp var

boot etc lib lib64 media opt root sbin sys usr

root@eaa156967336:/# ip netns exec foo ls

bin dev home lib32 libx32 mnt proc run srv tmp var

boot etc lib lib64 media opt root sbin sys usr

What does this show us? It shows that we are in the same filesystem,

with access to all of the files and programs. Let’s look at something

that differs:

root@eaa156967336:/# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/tunnel6 :: brd ::

6: v1@v0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff

7: v0@v1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

root@eaa156967336:/#

root@eaa156967336:/# ip netns exec foo ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/tunnel6 :: brd ::

If we are not in the namespace, we have all of our normal devices

(including the veth link we created before). In the namespace, we

have fewer, all of which are down.

We can run ping on the loopback address 127.0.0.1:

root@eaa156967336:/# ping -c 3 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.045 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.091 ms

64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.094 ms

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2060ms

rtt min/avg/max/mdev = 0.045/0.076/0.094/0.022 ms

root@eaa156967336:/# ip netns exec foo ping -c 3 127.0.0.1

ping: connect: Network is unreachable

Here we see that in the foo namespace, we are unable to ping ourselves,

because the lo device is down.

root@eaa156967336:/# ip netns exec foo ip link set lo up

root@eaa156967336:/# ip netns exec foo ping -c 3 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.050 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.087 ms

64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.109 ms

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2027ms

rtt min/avg/max/mdev = 0.050/0.082/0.109/0.024 ms

Now that lo is up, we can ping over loopback.

3.7.1 Using Namespaces to Create Virtual Hosts

We are going to see how to use network namespaces to create a testbed. This is an emulated network in a controlled environment, which can be an essential part of debugging and testing a network protocol. To do this, we are going to create a separate namespace for every virtual host in our testbed. We will then add links between them, configure routing, and even set link characteristics to control bandwidth, latency, and packet loss.

To start, we want a second namespace, which we will call bar:

root@eaa156967336:/# ip netns add bar

root@eaa156967336:/# ip netns

bar

foo

We will need to bring the lo device up in namespace bar before we

can send any packets. Recall that we have two virtual devices, v0

and v1, connected as a virtual ethernet link. root@eaa156967336:/# ip link show type veth

6: v1@v0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff

7: v0@v1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

root@eaa156967336:/# ip netns exec foo ip link show type veth

root@eaa156967336:/# ip netns exec bar ip link show type veth

Our two namespaces have no virtual devices in them. Going back to our physical analogy, we now think of the Raspberry Pi and router as separate namespaces (virtual hosts). The cable is not yet connecting them (Figure 3.3).

We can move devices between namespaces with another option to ip link set:

root@eaa156967336:/# ip link set v0 netns foo

root@eaa156967336:/# ip link set v1 netns bar

root@eaa156967336:/# ip link show type veth

16: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

root@eaa156967336:/# ip netns exec foo ip link show type veth

7: v0@if6: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff link-netns bar

root@eaa156967336:/# ip netns exec bar ip link show type veth

6: v1@if7: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff link-netns foo

Now we see that v0 is no longer in the default namespace, but is

instead in foo, and similarly v1 is in bar. This is sometimes called

“throwing the device over the wall” into the namespace, because you no

longer have access to it from the initial (default) namespace.

In the foo namespace, the link v0 is unconfigured:

root@eaa156967336:/# ip netns exec foo ip addr show v0

7: v0@if6: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff link-netns bar

The same is true of v1 in bar. Let’s add addresses to the devices

and bring them up:

root@eaa156967336:/# ip netns exec foo ip addr add 1.2.3.4/32 dev v0

root@eaa156967336:/# ip netns exec bar ip addr add 1.2.3.5/32 dev v1

root@eaa156967336:/# ip netns exec foo ip link set v0 up

root@eaa156967336:/# ip netns exec bar ip link set v1 up

root@eaa156967336:/# ip netns exec foo ip addr show v0

7: v0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 16:b6:e1:98:ed:b9 brd ff:ff:ff:ff:ff:ff link-netns bar

inet 1.2.3.4/32 scope global v0

valid_lft forever preferred_lft forever

inet6 fe80::14b6:e1ff:fe98:edb9/64 scope link

valid_lft forever preferred_lft forever

root@eaa156967336:/# ip netns exec bar ip addr show v1

6: v1@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 66:59:d5:2d:62:7b brd ff:ff:ff:ff:ff:ff link-netns foo

inet 1.2.3.5/32 scope global v1

valid_lft forever preferred_lft forever

inet6 fe80::6459:d5ff:fe2d:627b/64 scope link

valid_lft forever preferred_lft forever

At this point, we once again have the virtual equivalent of Figure 3.2, but now the Pi and router are virtual hosts.

3.7.2 Routing Between Namespaces

At this point, we have devices in foo and bar with addresses

1.2.3.4 and 1.2.3.5, and the devices are up and available for

traffic. What we do not yet have is a way for Linux to know how to

use these devices. We add this by modifying the routing tables for

the namespace.

Since foo and bar are directly connected by a single veth link,

we can do this fairly simply with the ip route add command:

root@eaa156967336:/# ip netns exec foo ip route add 1.2.3.5/32 dev v0 proto static scope global src 1.2.3.4

root@eaa156967336:/# ip netns exec bar ip route add 1.2.3.4/32 dev v1 proto static scope global src 1.2.3.5

root@eaa156967336:/# ip netns exec foo ip route

1.2.3.5 dev v0 proto static src 1.2.3.4

root@eaa156967336:/# ip netns exec bar ip route

1.2.3.4 dev v1 proto static src 1.2.3.5

There is a lot going on here. ip route add takes a CIDR prefix for

the destination, which in this case is just the address of the other

end of the link, the device through which to send traffic to this

destination, some “standard” options, and then the source address to

attach to traffic forwarded through this table entry. After doing this

for both namespaces, we can see that we now have routing tables with

the routes we just created.

The proof of the routing is in the pinging, so let’s give it a try:

root@eaa156967336:/# ip netns exec foo ping -c 3 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

64 bytes from 1.2.3.5: icmp_seq=1 ttl=64 time=0.023 ms

64 bytes from 1.2.3.5: icmp_seq=2 ttl=64 time=0.075 ms

64 bytes from 1.2.3.5: icmp_seq=3 ttl=64 time=0.107 ms

--- 1.2.3.5 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2040ms

rtt min/avg/max/mdev = 0.023/0.068/0.107/0.034 ms

We have a working network!

Our network is not terribly useful, though. Let’s add a third namespace,

baz, and connect it to bar. ip netns add baz

ip netns exec baz ip link set lo up

ip link add v2 type veth peer name v3

ip link set v2 netns bar

ip link set v3 netns baz

ip netns exec bar ip addr add 1.2.3.5/32 dev v2

ip netns exec baz ip addr add 1.2.3.6/32 dev v3

ip netns exec bar ip link set v2 up

ip netns exec baz ip link set v3 up

ip netns exec bar ip route add 1.2.3.6/32 dev v2 proto static scope global src 1.2.3.5

ip netns exec baz ip route add 1.2.3.5/32 dev v3 proto static scope global src 1.2.3.6

What does our network now look like? root@eaa156967336:/# ip netns exec foo ip route

1.2.3.5 dev v0 proto static src 1.2.3.4

root@eaa156967336:/# ip netns exec bar ip route

1.2.3.4 dev v1 proto static src 1.2.3.5

1.2.3.6 dev v2 proto static src 1.2.3.5

root@eaa156967336:/# ip netns exec baz ip route

1.2.3.5 dev v3 proto static src 1.2.3.6

What about connectivity? root@eaa156967336:/# ip netns exec foo ping -c 3 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

64 bytes from 1.2.3.5: icmp_seq=1 ttl=64 time=0.046 ms

64 bytes from 1.2.3.5: icmp_seq=2 ttl=64 time=0.101 ms

64 bytes from 1.2.3.5: icmp_seq=3 ttl=64 time=0.044 ms

--- 1.2.3.5 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2041ms

rtt min/avg/max/mdev = 0.044/0.063/0.101/0.026 ms

root@eaa156967336:/# ip netns exec foo ping -c 3 1.2.3.6

ping: connect: Network is unreachable

root@eaa156967336:/# ip netns exec bar ping -c 3 1.2.3.4

PING 1.2.3.4 (1.2.3.4) 56(84) bytes of data.

64 bytes from 1.2.3.4: icmp_seq=1 ttl=64 time=0.022 ms

64 bytes from 1.2.3.4: icmp_seq=2 ttl=64 time=0.271 ms

64 bytes from 1.2.3.4: icmp_seq=3 ttl=64 time=0.362 ms

--- 1.2.3.4 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2035ms

rtt min/avg/max/mdev = 0.022/0.218/0.362/0.143 ms

root@eaa156967336:/# ip netns exec bar ping -c 3 1.2.3.6

PING 1.2.3.6 (1.2.3.6) 56(84) bytes of data.

64 bytes from 1.2.3.6: icmp_seq=1 ttl=64 time=0.061 ms

64 bytes from 1.2.3.6: icmp_seq=2 ttl=64 time=0.074 ms

64 bytes from 1.2.3.6: icmp_seq=3 ttl=64 time=0.079 ms

--- 1.2.3.6 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2045ms

rtt min/avg/max/mdev = 0.061/0.071/0.079/0.007 ms

root@eaa156967336:/# ip netns exec baz ping -c 3 1.2.3.4

ping: connect: Network is unreachable

root@eaa156967336:/# ip netns exec baz ping -c 3 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

64 bytes from 1.2.3.5: icmp_seq=1 ttl=64 time=0.039 ms

64 bytes from 1.2.3.5: icmp_seq=2 ttl=64 time=0.073 ms

64 bytes from 1.2.3.5: icmp_seq=3 ttl=64 time=0.099 ms

--- 1.2.3.5 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2056ms

rtt min/avg/max/mdev = 0.039/0.070/0.099/0.024 ms

From this, we can see that foo and bar can ping each other, as can

bar and baz. foo and baz, however, cannot.

If we look at our routing tables again, we can see at least part of

what the problem is: foo has no forwarding rule that applies to the

address 1.2.3.6, and baz has no forwarding rule that applies to

the address 1.2.3.4. We can add these, and see what changes:

ip netns exec foo ip route add 1.2.3.0/24 dev v0 proto static scope global src 1.2.3.4

ip netns exec baz ip route add 1.2.3.0/24 dev v3 proto static scope global src 1.2.3.6

Now we examine our routing tables and try to ping:

root@eaa156967336:/# ip netns exec foo ip route

1.2.3.0/24 dev v0 proto static src 1.2.3.4

1.2.3.5 dev v0 proto static src 1.2.3.4

root@eaa156967336:/# ip netns exec baz ip route

1.2.3.0/24 dev v3 proto static src 1.2.3.6

1.2.3.5 dev v3 proto static src 1.2.3.6

root@eaa156967336:/# ip netns exec foo ping -c 3 1.2.3.6

PING 1.2.3.6 (1.2.3.6) 56(84) bytes of data.

From 1.2.3.4 icmp_seq=1 Destination Host Unreachable

From 1.2.3.4 icmp_seq=2 Destination Host Unreachable

From 1.2.3.4 icmp_seq=3 Destination Host Unreachable

--- 1.2.3.6 ping statistics ---

3 packets transmitted, 0 received, +3 errors, 100% packet loss, time 2085ms

pipe 3

We still cannot connect foo and baz, but why not? When bar

receives a packet through device v1 with a destination of 1.2.3.6,

it does not actually know that it is supposed to forward it

further. That means it just ignores the packet, and it goes no

further. Instead, we need bar to act as a bridge between devices

v1 and v2. Without bridging, we would have to have point-to-point

links between all hosts in our network (called a mesh network). This

will work, but it becomes unmanageable, and is not appropriate for all

testing situations. We will look at adding bridges, but first we are

going to take a look at something useful even in small mesh networks.

3.7.3 Network Emulation

One of the additional programs in iproute2 is tc, which implements

traffic control. There is a lot you can do with this, but we are going

to limit ourselves to simple queueing discplines (qdisc). We can add

a queueing discipline to a device with

ip netns exec foo tc qdisc add dev v0 root handle 1:0 netem

This adds a new qdisc to foo’s v0 device, and configures it for

network emulation (netem). We can then update the qdisc to emulate

real network behaviors:

ip netns exec foo tc qdisc change dev v0 root netem delay 10ms rate 1Mbit loss random 30

Now the v0 device in the foo namespace will send traffic with a

latency of 10ms, a bandwidth of 1Mbps, and will randomly drop 30% of

the packets. This is one-way, so if we ping bar:

root@eaa156967336:/# ip netns exec foo ping -c 5 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

64 bytes from 1.2.3.5: icmp_seq=2 ttl=64 time=11.4 ms

64 bytes from 1.2.3.5: icmp_seq=3 ttl=64 time=13.5 ms

64 bytes from 1.2.3.5: icmp_seq=5 ttl=64 time=14.2 ms

--- 1.2.3.5 ping statistics ---

5 packets transmitted, 3 received, 40% packet loss, time 4086ms

rtt min/avg/max/mdev = 11.365/13.014/14.210/1.204 ms

We see RTTs slightly over 10ms because of the bandwidth limit. If we lower

the bandwidth to 100kbps, this becomes more pronounced:

root@eaa156967336:/# ip netns exec foo tc qdisc change dev v0 root netem delay 10ms rate 100kbit loss random 30

root@eaa156967336:/# ip netns exec foo ping -c 5 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

64 bytes from 1.2.3.5: icmp_seq=2 ttl=64 time=18.2 ms

64 bytes from 1.2.3.5: icmp_seq=3 ttl=64 time=18.4 ms

64 bytes from 1.2.3.5: icmp_seq=4 ttl=64 time=18.4 ms

64 bytes from 1.2.3.5: icmp_seq=5 ttl=64 time=18.0 ms

--- 1.2.3.5 ping statistics ---

5 packets transmitted, 4 received, 20% packet loss, time 4147ms

rtt min/avg/max/mdev = 18.002/18.249/18.442/0.167 ms

We are not seeing precisely 30% packet loss, because of the small sample size. If we increase this:

root@eaa156967336:/# ip netns exec foo ping -q -c 20 1.2.3.5

PING 1.2.3.5 (1.2.3.5) 56(84) bytes of data.

--- 1.2.3.5 ping statistics ---

20 packets transmitted, 14 received, 30% packet loss, time 19757ms

rtt min/avg/max/mdev = 18.259/19.568/22.042/1.315 ms

The -q flag makes ping “quiet”, printing only the summary at the end.

3.7.4 Adding Bridge Nodes

At this point, we can create mesh networks with realistic network link

emulation. We still do not have actual packet forwarding, however, so

now it is time to revisit bridging. Within the Linux kernel, a bridge

device allows externally facing devices, like our veth endpoints, to

interact through the routing table.1

The old way to create a bridge was with the brctl command. Here are

the steps to do this:

ip netns exec bar brctl addbr br0

ip netns exec bar ip addr add dev br0 local 1.2.3.5

ip netns exec bar ip link set dev br0 up

ip netns exec bar brctl addif br0 v1

ip netns exec bar brctl addif br0 v2

After executing these commands, we can communicate between foo and baz!

root@eaa156967336:/# ip netns exec foo ping -c 3 1.2.3.6

PING 1.2.3.6 (1.2.3.6) 56(84) bytes of data.

64 bytes from 1.2.3.6: icmp_seq=1 ttl=64 time=33.6 ms

64 bytes from 1.2.3.6: icmp_seq=2 ttl=64 time=19.3 ms

--- 1.2.3.6 ping statistics ---

3 packets transmitted, 2 received, 33.3333% packet loss, time 2009ms

rtt min/avg/max/mdev = 19.326/26.444/33.563/7.118 ms

The iproute2 package has a bridge command that replaces brctl, and

has additional functionality. We actually do not even need this to

create a simple bridge, as the ip command can do the basic

configuration”

ip netns exec bar ip link add name br0 type bridge

ip netns exec bar ip link set dev br0 up

ip netns exec bar ip link set dev v1 master br0

ip netns exec bar ip link set dev v2 master br0

This begins by creating a new device (br0) of type bridge. Once we

have brought the device up, we can set our virtual devices v1 and

v2 as subordinate to br0 with the master property. We can see

the bridge configuration with:

root@eaa156967336:/# ip netns exec bar bridge link show

6: v1@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br0 state forwarding priority 32 cost 2

9: v2@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master br0 state forwarding priority 32 cost 2

Now we can create an arbitrary2 number of virtual hosts, with realistic forwarding tables and link characteristics. This allows us to test protocols in a wide variety of network configurations, including those that involve network failures or attacks.

Chapter 4 Interactions Between Layers

We have mostly looked at the OSI model’s layers independently, but when was the last time you typed an IP address into your web browser, not to mention a sequence of MAC addresses? Instead, we present a reasonable name at an appropriate layer, and rely on additional network services to convert this to the address used by the layer below or above.

4.1 Domain Name System

Let’s start with the one you are likely to see many times every day. This book is currently hosted on a server with IP address

128.8.127.4, but you almost certainly did not provide this address

directly to your browser. Instead, you would have entered (or followed

a link to) www.cs.umd.edu. This human-friendly name is not routable

by the network, so we need to get the associated IP address. How does

this work?

The Domain Name System (DNS) is a globally distributed database. Its primary (but not only) function is to provide mappings between

fully qualified domain names (FQDNs, such as www.cs.umd.edu) and

IP addresses (such as 128.8.127.4). Domain names are organized

hierarchically, beginning with the top-level domains (TLDs), and

delegating to registries. The TLDs are defined and managed by the

Internet Corporation for Assigned Names and Numbers (ICANN), as are

the country-code domains (such as .us).

Most applications that take FQDNs (ie, hostnames) perform DNS

lookups automatically. You can do this from the command line, as well,

which is often useful. Some systems still use an old program called

nslookup for this, but that has largely been deprecated in favor of

the programs host and dig.

Most of the time, host is the program you want to use. Given a FQDN,

it will tell you the IP address or addresses associated with it:

✔ 12:58:45 389Z $ host www.cs.umd.edu

www.cs.umd.edu is an alias for www-hlb.cs.umd.edu.

www-hlb.cs.umd.edu has address 128.8.127.4

In this case, the FQDN www.cs.umd.edu is an alias for another FQDN,

www-hlb.cs.umd.edu, so host retrieves the address record for this

as well. You can also perform reverse lookups, where you ask for

the FQDN matching an IP address:

✔ 13:00:47 389Z $ host 128.8.127.4

4.127.8.128.in-addr.arpa domain name pointer www-hlb.cs.umd.edu.

Here we actually perform a special DNS lookup in the in-addr.arpa

domain, with the order of the quads reversed. The reason for this is

that FQDNs begin with the host-specific part of the name and end with

the top-level domain, but IP addresses begin with the largest network

segment and end with the host-specific part of the address. Not all IP

addresses will have a reverse entry.

When you want to resolve a FQDN, you first contact your local resolver, also called a DNS server. The resolver should be set for you automatically when you join the network if you are using DHCP (Dynamic Host Configuration Protocol), which you almost always are. This local resolver maintains local information and a cache of retrieved DNS database records, and will recursively contact other resolvers for you if it does not know the requested information.

We are using “FQDN” rather than “hostname” deliberately here. While a

FQDN is a hostname, a hostname might not be a FQDN. In particular, a

host on your local network could be known simply by its short

hostname, such as www (on the CS department’s network):

✔ 13:00:51 389Z $ host www

www.cs.umd.edu is an alias for www-hlb.cs.umd.edu.

In this case, the local host appends its known domain to the requested hostname to obtain the FQDN for the DNS request.

There is another program called dig that does substantially the same

thing. The main difference is in the information displayed:

✔ 13:43:15 389Z $ dig www.cs.umd.edu

; <<>> DiG 9.10.6 <<>> www.cs.umd.edu

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 12444

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;www.cs.umd.edu. IN A

;; ANSWER SECTION:

www.cs.umd.edu. 11373 IN CNAME www-hlb.cs.umd.edu.

www-hlb.cs.umd.edu. 83373 IN A 128.8.127.4

;; Query time: 32 msec

;; SERVER: 10.72.14.49#53(10.72.14.49)

;; WHEN: Tue Nov 08 13:48:48 EST 2022

;; MSG SIZE rcvd: 81

We have been given a lot more information here, including all of the

options that were included in the request and a detailed breakdown of

the response. Comparing what we saw in the output from host, an

alias is resolved through a CNAME (“Canonical Name”) DNS record,

while an address is resolved through an A (“Address”) record. The

number that appears after the FQDN (which technically ends in a dot,

though you would not provide that as part of a hostname) is the time

to live for this record. Unlike the TTL of an IP packet, this TTL is

measured in seconds, and is how long the record may be cached before

it expires and should be retrieved again. In this case,

www.cs.umd.edu should be checked again in a little over 3 hours to

make sure it is still an alias for www-hlb.cs.umd.edu, which itself

should be checked in almost 14 hours to see if its IP address has

changed.

4.2 Network Blocks

As with the DNS hierarchy, the IP address space is also managed as a hierarchy. The largest network blocks (netblocks) are assigned by the Internet Assigned Numbers Authority (IANA), and those blocks are then subdivided by their respective owners. Some of this is publicly available, though as networks are divided into ever-smaller subnets, this information becomes purely internal.

We can learn a good deal about both domains and netblocks with the

whois program, which queries yet another distributed database. Here

is an example of a domain registration lookup:

✔ 14:17:29 389Z $ whois umd.edu

% IANA WHOIS server

% for more information on IANA, visit http://www.iana.org

% This query returned 1 object

refer: whois.educause.edu

domain: EDU

organisation: EDUCAUSE

address: 282 Century Place, Suite 5000

address: Louisville, CO 80027

address: United States

contact: administrative

name: Information Services Administration

organisation: EDUCAUSE

address: 4772 Walnut Street, Suite 206

address: Boulder Colorado 80301

address: United States

phone: +1-303-449-4430

fax-no: +1-303-440-0461

e-mail: netadmin@educause.edu

contact: technical

name: Registry Customer Service

organisation: VeriSign Global Registry

address: 12061 Bluemont Way

address: Reston Virginia 20190

address: United States

phone: +1-703-925-6999

fax-no: +1-703-948-3978

e-mail: info@verisign-grs.com

nserver: A.EDU-SERVERS.NET 192.5.6.30 2001:503:a83e:0:0:0:2:30