Rendering Crystal Glass Caustics

Author: Alisa Chen

Advisor: Dr. David M. Mount

CMSC 498A, Spring 2011


Overview

Introduction

Although glassmaking and the glass arts have been known since ancient times, lead glass, originally called glass-of-lead, was not invented until George Ravenscroft added lead oxide to glass. Because it is a "perticuler sort of Christaline Glass resembling rock Christal", it is also called lead crystal or crystal glass. [1]

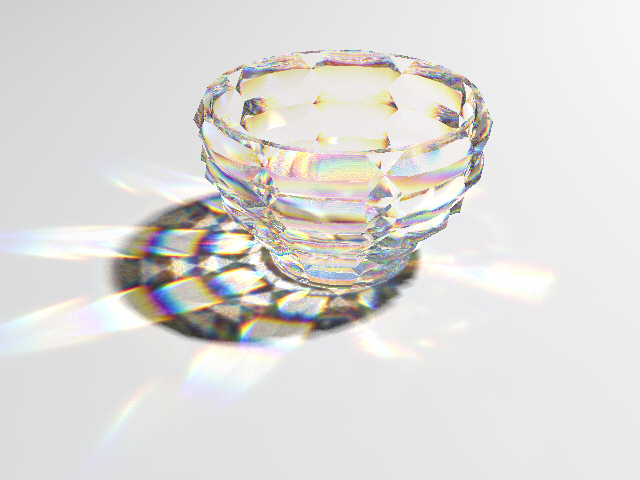

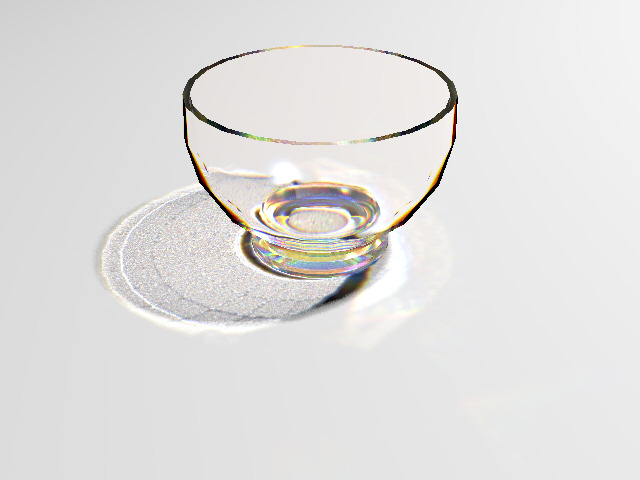

The appeal of crystal glass comes from two properties: its index of refraction is higher than that of ordinary glass, and it disperses light. Together these distort the reflections of its surroundings and create rainbows. Of particular interest to this study are caustics, the patterns that light forms after reflecting off or passing through the curved surfaces of crystal. These patterns include the colors produced by chromatic dispersion.

To render crystal glass caustics, this independent study researched photon mapping as a way to enhance a basic ray tracer. Two enhancements help a ray tracer handle caustics: distributed ray tracing and photon mapping. The Persistence of Vision Raytracer (POV-Ray) 3.6 implements both and was found sufficient to render these images.

Definitions

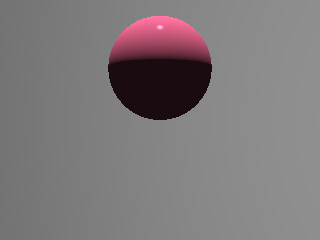

Shading with the Phong Model (Ambient/Diffuse/Specular)

ambient: in ray tracing and shading with the Phong model, ambient light is a modeling heuristic to soften harsh shadows with a uniformly distributed light [2]; in photon mapping, ambient light is modeled along with other forms of indirect lighting

caustic: light reflected or transmitted via one or more specular surfaces before hitting a diffuse surface [3]

diffuse: light reflected from a surface and scattered in all directions from that side [4]

dispersion: the bending of different wavelengths of light at different angles; e.g., the chromatic dispersion of white light by a prism

global illumination: illumination from both direct and indirect lighting; as Jensen explains, this includes caustics, diffuse interreflections, and participating media [5]

index of refraction: the ratio of the speed of light in a vacuum to the speed of light in a medium, which determines how much light bends as it passes into the medium; for example, ordinary glass has an index of refraction of about 1.5, while crystal glass has a higher index of about 1.7 [6]

photon: a quantum (smallest possible independent unit) of light; in photon mapping it is modeled by a position, a direction of propagation, and a color [8]

specular: light reflected from a surface at an angle equal or close to the angle of incidence; specularly reflected light that reaches the eye shows up as highlights on the surface, usually in the color of the light source [7]
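The sketch below makes the index-of-refraction comparison concrete. It applies Snell's law, n₁ sin θ₁ = n₂ sin θ₂, to a ray that enters glass from air at 45°, using the indices of about 1.5 and 1.7 quoted for ordinary and crystal glass. It assumes an index of 1.0 for air, and the function name `refraction_angle` is illustrative only.

```python
import math

def refraction_angle(theta_in_deg: float, n1: float, n2: float) -> float:
    """Refracted angle in degrees from Snell's law, n1*sin(t1) = n2*sin(t2)."""
    s = n1 * math.sin(math.radians(theta_in_deg)) / n2
    if s > 1.0:
        raise ValueError("total internal reflection: no refracted ray")
    return math.degrees(math.asin(s))

# Air (n = 1.0, assumed) into ordinary glass and into crystal glass at 45 degrees.
for name, n_glass in [("ordinary glass", 1.5), ("crystal glass", 1.7)]:
    t = refraction_angle(45.0, 1.0, n_glass)
    print(f"{name}: refracted at {t:.1f} degrees, bent by {45.0 - t:.1f} degrees")
```

At 45°, the higher index of crystal glass bends the ray by roughly 20° instead of roughly 17°. This stronger bending helps produce the more pronounced distortions and caustics described in the introduction.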

Photon Mapping

Jensen introduced photon mapping as an extension of ray tracing, creating a two-pass global illumination method. The first pass emits photons from the light sources into the scene. Wherever a photon hits a non-specular object, it is stored in a data structure called the photon map. The second pass, called the rendering pass, uses the photon map to extract information about the light arriving at each rendered point in the scene and about that point's color. [9]

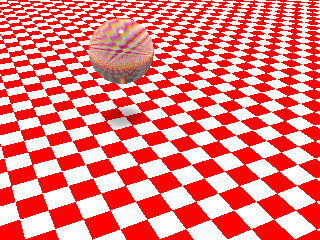

Ray Tracing

Ray tracing is a rendering method used in many graphics software systems. A ray tracer simulates how rays of light pass through a scene, acting as an eye or camera looking into a virtual three-dimensional model. It projects that model onto a two-dimensional plane of pixels to produce the rendered image.

For each pixel, a ray-tracing algorithm traces rays from the eye into the scene and toward the light sources to find the perceived color of the pixel. [10] Rays can also be traced in the opposite direction, from the light sources toward the eye. However, only rays that enter the eye affect what is rendered, and the vast majority of rays emitted from light sources never reach the eye. For this reason, tracing from the eye is usually preferred.

There is also a variant, called bidirectional ray tracing, where rays are traced in both directions.

Shirley and Marschner, authors of Fundamentals of Computer Graphics, and Hill, author of Computer Graphics Using OpenGL, give the basic ray-tracing algorithm as follows [11]:

    void ray_tracer:
        for each pixel (i, j) do
            compute viewing ray from eye through pixel location on image plane
            if (ray_hits_an_object) then
                set pixel_color to Phong_color
            else
                set pixel_color to background color

    boolean ray_hits_an_object:
        hit = false
        for each object o in scene do
            if (o is hit at ray parameter t and t is within the range [t0, t1]) then
                hit = true
                hit_object = o
                t1 = t
        return hit

    color Phong_color:
        set color to emissive color of object (if it radiates light)
        add to color the ambient, diffuse, and specular components
        add to color the reflected and refracted components
        return color
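The basic algorithm can be turned into a minimal runnable Python sketch. It makes several simplifying assumptions. The scene contains only spheres, the eye sits at the origin looking down the negative z axis through an image plane at z = -1, and `phong_color` is a stand-in that adds only an ambient term and a Lambertian diffuse term, with no specular, reflected, or refracted components. All names are illustrative.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: Vec
    radius: float
    color: Vec

    def hit(self, origin: Vec, direction: Vec, t0: float, t1: float):
        """Return the smallest ray parameter t in [t0, t1] at which the ray hits, else None."""
        oc = sub(origin, self.center)
        a = dot(direction, direction)
        b = 2.0 * dot(oc, direction)
        c = dot(oc, oc) - self.radius ** 2
        disc = b * b - 4.0 * a * c
        if disc < 0.0:
            return None
        root = math.sqrt(disc)
        for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
            if t0 <= t <= t1:
                return t
        return None

def ray_hits_an_object(scene, origin, direction, t0=1e-4, t1=math.inf):
    """Return (hit_object, t) for the closest hit, shrinking t1 after each hit as in the pseudocode."""
    hit_object = None
    for o in scene:
        t = o.hit(origin, direction, t0, t1)
        if t is not None:
            hit_object, t1 = o, t
    return hit_object, t1

def phong_color(obj, point, light_dir, ambient=0.1):
    """Simplified stand-in for Phong_color: ambient plus Lambertian diffuse only."""
    normal = normalize(sub(point, obj.center))
    k = ambient + max(0.0, dot(normal, light_dir))
    return tuple(min(1.0, c * k) for c in obj.color)

def ray_tracer(scene, width, height, light_dir, background=(0.0, 0.0, 0.0)):
    eye = (0.0, 0.0, 0.0)
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Viewing ray from the eye through the pixel centre on an image plane at z = -1.
            x = 2.0 * (i + 0.5) / width - 1.0
            y = 1.0 - 2.0 * (j + 0.5) / height
            direction = normalize((x, y, -1.0))
            obj, t = ray_hits_an_object(scene, eye, direction)
            if obj is not None:
                row.append(phong_color(obj, add(eye, scale(direction, t)), light_dir))
            else:
                row.append(background)
        image.append(row)
    return image
```

For example, `ray_tracer([Sphere((0.0, 0.0, -3.0), 1.0, (1.0, 0.0, 0.0))], 5, 5, (0.0, 0.0, 1.0))` returns a 5×5 grid. In that grid, the centre pixel sees the red sphere and the corner pixels see the background.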

Distributed Ray Tracing

Basic ray tracers are best at rendering forms with sharp boundaries rather than smooth gradations of color, because each pixel is computed from a single ray sample. As a result, shadows, reflected images, and refracted images end up with hard edges. A realistic soft edge on shadows (and caustics) requires more rays at those edges. Instead of taking a single ray sample per pixel, the ray tracer therefore oversamples each pixel with multiple rays. The following is the basic algorithm for distributed ray tracing; for a given parameter n, it generates n² rays for each pixel [12]:

    void distributed_ray_tracer:
        for each pixel (i, j) do
            set color to 0
            for p = 0 to n - 1 do
                for q = 0 to n - 1 do
                    add to color the ray_color at location (i + (p + 0.5)/n, j + (q + 0.5)/n)
            set pixel_color to color/n²

    color ray_color:
        compute viewing ray from eye through location on image plane
        if (ray_hits_an_object) then
            set color to Phong_color
        else
            set color to background color
        return color
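A short Python sketch shows how regular oversampling softens an edge. The `ray_color` function is passed in as a parameter. The example `edge_color`, a hypothetical shadow boundary at x = 10.5, stands in for a real scene.

```python
def grid_samples(i: int, j: int, n: int) -> list[tuple[float, float]]:
    """Return the n*n regularly spaced sample locations inside pixel (i, j)."""
    return [(i + (p + 0.5) / n, j + (q + 0.5) / n) for p in range(n) for q in range(n)]

def distributed_pixel_color(i: int, j: int, n: int, ray_color) -> tuple[float, float, float]:
    """Average ray_color(x, y) over the n*n samples, i.e. color / n² in the pseudocode."""
    total = [0.0, 0.0, 0.0]
    for x, y in grid_samples(i, j, n):
        r, g, b = ray_color(x, y)
        total[0] += r
        total[1] += g
        total[2] += b
    return (total[0] / (n * n), total[1] / (n * n), total[2] / (n * n))

# Hypothetical ray_color: lit to the left of a shadow edge at x = 10.5, dark to the right.
def edge_color(x: float, y: float) -> tuple[float, float, float]:
    return (1.0, 1.0, 1.0) if x < 10.5 else (0.0, 0.0, 0.0)

print(distributed_pixel_color(10, 0, 1, edge_color))  # one sample: (0.0, 0.0, 0.0), a hard edge
print(distributed_pixel_color(10, 0, 4, edge_color))  # 16 samples: (0.5, 0.5, 0.5), a soft edge
```

With one sample, the pixel straddling the edge is either fully lit or fully dark. With n = 4, half of its 16 samples are lit, so the pixel takes an intermediate value.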

Due to the regular nature of the grid, this algorithm may lead to unwanted artifacts, such as moiré patterns. To eliminate these patterns, a technique known as jittering is often employed, which randomly perturbs the position of each sample within its grid cell. [13]

    void jittered_ray_tracer:
        for each pixel (i, j) do
            set color to 0
            for p = 0 to n - 1 do
                for q = 0 to n - 1 do
                    set ξ₁ and ξ₂ to independent random numbers in the range [0, 1)
                    add to color the ray_color at location (i + (p + ξ₁)/n, j + (q + ξ₂)/n)
            set pixel_color to color/n²
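The jittered sample positions can be generated with a small Python sketch. Each of the n × n sub-cells of a pixel receives exactly one sample. Its x and y offsets within the cell are drawn independently from Python's `random.Random.random()`, which returns values in [0, 1). The function name is illustrative.

```python
import random
from typing import Optional

def jittered_samples(i: int, j: int, n: int,
                     rng: Optional[random.Random] = None) -> list[tuple[float, float]]:
    """Return one random sample inside each of the n*n sub-cells of pixel (i, j).

    Independent random numbers in [0, 1) are drawn for the x and y offsets of every sample.
    """
    rng = rng if rng is not None else random.Random()
    return [(i + (p + rng.random()) / n, j + (q + rng.random()) / n)
            for p in range(n) for q in range(n)]

samples = jittered_samples(3, 7, 4, random.Random(2011))
print(len(samples))  # 16 samples, all inside pixel (3, 7) but off the regular grid
```

Stratifying the random offsets, one sample per sub-cell, keeps the samples evenly spread over the pixel. At the same time, it breaks the regular spacing that causes moiré patterns.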

Distributed ray tracing may be further optimized with photon mapping, directing oversampling only where needed at the edges of forms. [14]

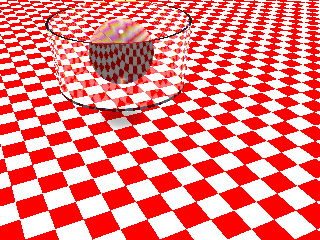

Photon Mapping

Photon mapping provides information about indirect lighting in the scene, allowing more physically realistic renderings than simple local heuristics such as the ambient component of the Phong shading model. The first pass builds the photon map using photon tracing, which is similar to ray tracing. The second pass renders the scene using ray tracing optimized by the photon map. The method is therefore bidirectional: Jensen's algorithm traces light from the light sources as well as from the eye, because only light traced from the light sources carries the information needed to model caustics.

Tracing Pass

In the first pass, photons are emitted from all the light sources. Jensen recommends giving every photon the same power, so that computation time is not wasted on photons that contribute little to the scene. In other words, the algorithm shoots more photons from brighter light sources. It does not shoot the same number of photons as from a dimmer light source and compensate by increasing the power of each photon. The direction in which each photon is shot is chosen probabilistically, based on the shape of the light source.
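A Python sketch of the emission step follows. It assumes two hypothetical lights with powers of 100 and 300 and a budget of 1000 photons. It splits the photons in proportion to power so that every photon carries the same power. For the "shape of the light source" it covers only the simplest case, an isotropic point light, choosing a uniform random direction by rejection sampling in the unit cube. Area lights and spotlights would need other distributions, which are not shown.

```python
import math
import random

def photons_per_light(light_powers: list[float], total_photons: int) -> list[int]:
    """Give each light a share of the photons proportional to its power (rounded)."""
    total_power = sum(light_powers)
    return [round(total_photons * p / total_power) for p in light_powers]

def point_light_direction(rng: random.Random) -> tuple[float, float, float]:
    """Uniform random emission direction for an isotropic point light.

    Rejection sampling: draw points in the cube [-1, 1]^3 until one falls inside the
    unit sphere, then normalize it.
    """
    while True:
        x, y, z = (rng.uniform(-1.0, 1.0) for _ in range(3))
        d2 = x * x + y * y + z * z
        if 0.0 < d2 <= 1.0:
            d = math.sqrt(d2)
            return (x / d, y / d, z / d)

powers = [100.0, 300.0]                         # hypothetical light powers
counts = photons_per_light(powers, 1000)        # [250, 750]
print([p / c for p, c in zip(powers, counts)])  # equal power per photon: [0.4, 0.4]
```

Because of rounding, the counts may not sum exactly to the budget for arbitrary inputs. A full implementation would also divide each light's power by the number of photons it actually emitted.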

Each photon is traced through the scene with photon tracing. This works much like ray tracing, except that photons behave differently from rays. When a ray hits an object, it may be reflected or refracted, and it usually terminates after hitting a diffuse object. When a photon hits an object, it may be reflected, transmitted, or absorbed. In Jensen's algorithm, the interaction at each hit is chosen by a probabilistic method called Russian Roulette, in which the probability of each interaction depends on the properties of the object. Averaging over many photons gives the correct distribution of power among the three interactions. Jensen also explains how to compute these probabilities when the reflection coefficients differ between color channels. [15]
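Russian Roulette can be sketched in Python for a surface described by per-channel (r, g, b) diffuse and specular coefficients. The sketch follows the scheme Jensen describes. A uniform random number ξ selects diffuse reflection with probability P_d, specular reflection or transmission with probability P_s, and absorption otherwise. P_d and P_s are computed from the channel with the largest weighted power, and the surviving photon's power is rescaled per channel so that the expected power stays correct. The function name and the assumption that d + s ≤ 1 in every channel are illustrative.

```python
def russian_roulette(power, diffuse, specular, xi):
    """Choose one photon-surface interaction by Russian Roulette.

    power, diffuse and specular are per-channel (r, g, b) tuples; xi is uniform in [0, 1).
    Returns (interaction, new_power); new_power is None when the photon is absorbed.
    """
    p_max = max(power)
    # Probabilities from the channel with the largest weighted power.
    p_d = max(d * p for d, p in zip(diffuse, power)) / p_max
    p_s = max(s * p for s, p in zip(specular, power)) / p_max
    if xi < p_d:
        # Diffuse reflection: rescale so the expected reflected power is power * diffuse.
        return "diffuse", tuple(p * d / p_d for p, d in zip(power, diffuse))
    if xi < p_d + p_s:
        # Specular reflection or transmission, rescaled the same way.
        return "specular", tuple(p * s / p_s for p, s in zip(power, specular))
    return "absorbed", None

# A white photon hitting a reddish diffuse surface with a little specular reflection.
print(russian_roulette((1.0, 1.0, 1.0), (0.8, 0.4, 0.2), (0.1, 0.1, 0.1), 0.5))
```

The surviving photon keeps most of its power rather than being attenuated at every bounce. This is why averaging many photons, rather than tracking fractional power along every branch, produces the correct result.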

Photons are stored wherever they hit non-specular surfaces. Each photon can be stored several times along its path, recording the position, incoming photon power, and incoming direction for each photon-surface interaction. If the photon is absorbed by a diffuse surface, then the information about this photon is stored at the surface. [16]

Jensen explains that it is more efficient to store photons in three separate maps, according to their interactions, than in a single map. The global photon map gives an approximate representation of the global illumination for all diffuse surfaces. This includes photons that first hit other surfaces (diffuse, specular, or participating media). The volume photon map stores photons that have at least one interaction before going through a participating medium or volume. The caustic photon map stores photons that have undergone at least one specular reflection or transmission before hitting a diffuse surface. It is built in a separate pass that shoots photons toward the specular objects in the scene. [17]
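The rules for choosing a map can be written as regular expressions over a photon's path. The sketch below assumes Heckbert's path notation: L for the light, S for a specular reflection or transmission, D for a diffuse surface, and V for a participating medium. In this notation, caustic photons follow L S+ D paths and global photons follow L (S|D|V)* D paths. The function would be called with the path so far each time a photon reaches a diffuse surface. The volume map is left out, as in this study's scenes.

```python
import re

# Photon paths in Heckbert's notation: L = light, S = specular bounce (reflection or
# transmission), D = diffuse surface, V = participating medium (volume).
CAUSTIC_PATH = re.compile(r"LS+D")     # at least one specular bounce, then a diffuse surface
GLOBAL_PATH = re.compile(r"L[SDV]*D")  # any path that ends on a diffuse surface

def maps_for(path: str) -> list[str]:
    """Return the photon maps that should store a photon whose path so far is `path`."""
    maps = []
    if CAUSTIC_PATH.fullmatch(path):
        maps.append("caustic")
    if GLOBAL_PATH.fullmatch(path):
        maps.append("global")
    return maps

for path in ["LD", "LSD", "LSSD", "LDSD", "LSV"]:
    print(path, maps_for(path))
```

A photon that passes through a crystal bowl and lands on a table (path LSSD) belongs in the caustic map. A photon that first bounces off a diffuse wall (LDSD) is not a caustic photon by this definition, even if it later passes through glass.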

The following is the general algorithm for photon tracing:

    void photon_tracer:
        determine distribution of photons between light sources
        for each light do
            for each photon from light do
                continue = true
                set photon_color to light_color
                set photon_position to a point on the light
                set photon_angle to angle based on distribution from light
                while (continue = true and photon in range of rendered scene) do
                    if (photon_hits_an_object) then
                        move photon to the hit point
                        if (hit surface is non-specular) then
                            store photon information in photon map
                        determine interaction (reflect, transmit, absorb) by Russian Roulette based on object properties
                        if (photon is absorbed or photon hits second diffuse surface) then
                            continue = false
                        else
                            set photon_angle based on interaction and surface properties
                    else
                        continue = false

    boolean photon_hits_an_object:
        hit = false
        for each object o in scene do
            if (o is hit at photon parameter t and t is within the range [t0, t1]) then
                hit = true
                hit_object = o
                t1 = t
        return hit
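The control flow of the photon tracer can be captured in a runnable Python sketch. It follows the photon_tracer outline and the storage rule described earlier, where photons are stored at non-specular surfaces. The scene geometry is abstracted away behind three hypothetical callbacks supplied by the caller: `intersect`, `scatter`, and `emit`. Russian Roulette is reduced to a single survival probability per hit, and a `max_bounces` cap is added as a safety limit. None of these names come from Jensen or POV-Ray.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional

Vec = tuple[float, float, float]

@dataclass
class Hit:
    point: Vec
    diffuse: bool     # True for a non-specular surface, where photons are stored
    survival: float   # hypothetical single-channel probability that the photon is not absorbed

@dataclass
class Photon:
    position: Vec
    direction: Vec
    power: float

def trace_photon(photon: Photon, intersect: Callable[[Vec, Vec], Optional[Hit]],
                 scatter: Callable[[Hit, Vec, random.Random], Vec],
                 photon_map: list, rng: random.Random, max_bounces: int = 16) -> None:
    """Follow one photon, storing it at non-specular hits, until it leaves, dies, or stops."""
    diffuse_hits = 0
    for _ in range(max_bounces):              # safety cap on path length
        hit = intersect(photon.position, photon.direction)
        if hit is None:                       # photon left the rendered scene
            return
        photon.position = hit.point
        if hit.diffuse:                       # store only at non-specular surfaces
            photon_map.append((hit.point, photon.direction, photon.power))
            diffuse_hits += 1
        absorbed = rng.random() >= hit.survival   # Russian Roulette
        if absorbed or diffuse_hits == 2:     # absorbed, or second diffuse surface reached
            return
        photon.direction = scatter(hit, photon.direction, rng)

def photon_tracer(lights, emit, intersect, scatter, rng: random.Random) -> list:
    """lights: (light, n_photons, power) triples; emit(light, rng) -> (position, direction)."""
    photon_map = []
    for light, n_photons, power in lights:
        for _ in range(n_photons):
            position, direction = emit(light, rng)
            photon = Photon(position, direction, power / n_photons)  # equal power per photon
            trace_photon(photon, intersect, scatter, photon_map, rng)
    return photon_map

# Stub scene (hypothetical; it ignores real geometry): every photon lands on a diffuse
# floor at the origin and survives each hit with probability 0.5.
def floor(position: Vec, direction: Vec) -> Hit:
    return Hit((0.0, 0.0, 0.0), True, 0.5)

def emit(light, rng: random.Random):
    return (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)

def bounce_up(hit: Hit, direction: Vec, rng: random.Random) -> Vec:
    return (0.0, 0.0, 1.0)

photon_map = photon_tracer([("lamp", 100, 50.0)], emit, floor, bounce_up, random.Random(7))
print(len(photon_map))  # between 100 and 200: one or two stored hits per photon
```

In a real tracer, `intersect` would be the photon_hits_an_object search over the scene's objects. `scatter` would pick the new direction from the interaction chosen by Russian Roulette.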

Jensen recommends a balanced kd-tree as the data structure for the photon maps, because locating a photon in the tree takes O(log n) time in the worst case, where n is the number of photons. [18]
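A balanced kd-tree for photon positions can be sketched in Python as follows. It is built by splitting at the median and searched with a pruned nearest-neighbour query. For brevity, the sketch cycles through the x, y, and z axes and uses linked nodes. This is a simplification: Jensen's notes describe a more compact array-based layout and a different choice of splitting dimension.

```python
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]

@dataclass
class KdNode:
    point: Point
    axis: int
    left: Optional["KdNode"]
    right: Optional["KdNode"]

def build_kdtree(points: list[Point], depth: int = 0) -> Optional[KdNode]:
    """Build a balanced kd-tree by splitting at the median, cycling through x, y, z."""
    if not points:
        return None
    axis = depth % 3
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return KdNode(points[mid], axis,
                  build_kdtree(points[:mid], depth + 1),
                  build_kdtree(points[mid + 1:], depth + 1))

def dist2(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(node: Optional[KdNode], target: Point, best=None):
    """Return (squared distance, point) for the stored point closest to target."""
    if node is None:
        return best
    d2 = dist2(node.point, target)
    if best is None or d2 < best[0]:
        best = (d2, node.point)
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, target, best)
    # Visit the far side only if the splitting plane is closer than the best match so far.
    if diff * diff < best[0]:
        best = nearest(far, target, best)
    return best

tree = build_kdtree([(0.0, 0.0, 0.0), (1.0, 2.0, 0.5), (3.0, 1.0, 2.0), (-1.0, 0.5, 1.0)])
print(nearest(tree, (0.9, 1.8, 0.4)))  # closest stored photon position
```

Because the median split keeps the tree balanced, the search descends about log₂ n levels before it starts pruning. The rendering pass depends on this, since it queries the map at every shaded point.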

Rendering Pass

In the second pass of Jensen's algorithm, the final image is rendered using distributed ray tracing, in which the pixel color (radiance) is computed by averaging a number of sample estimates. [19]

The photon map is view-independent, so the same map can be used to render a scene from different viewpoints. An important feature of the photon map method is the ability to compute color estimates at any non-specular surface point in any given direction by using the nearest photons in the photon map. For caustic lighting effects, this estimate is filtered in order to preserve the sharp edges of caustics. [20]
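The color estimate from the nearest photons can be sketched in Python as a density estimate on a Lambertian surface. The k nearest photons are gathered, their power is summed, and the sum is divided by the area π r² of the disc that contains them, then multiplied by the BRDF (albedo/π). The optional weighting is the cone filter that Jensen describes for caustics, w = 1 − d/(k·r), normalized by (1 − 2/(3k)). It gives nearby photons more weight so that caustic edges blur less. The sketch uses a brute-force sort instead of a kd-tree and a single color channel, and the function name is illustrative.

```python
import math

def radiance_estimate(photons, x, k, albedo, cone_k=None):
    """Estimate radiance leaving point x of a Lambertian surface from its k nearest photons.

    photons: list of (position, power) pairs; albedo: diffuse reflectance (one channel).
    cone_k: if given (cone_k >= 1), weight each photon by the cone filter
    w = 1 - d / (cone_k * r), which favours photons close to x.
    """
    nearest = sorted((math.dist(p, x), power) for p, power in photons)[:k]
    r = nearest[-1][0]        # radius of the sphere that holds the k nearest photons
    f_r = albedo / math.pi    # Lambertian BRDF; photon directions do not matter here
    if cone_k is None:
        flux = sum(power for _, power in nearest)
        return f_r * flux / (math.pi * r * r)
    flux = sum(power * (1.0 - d / (cone_k * r)) for d, power in nearest)
    return f_r * flux / ((1.0 - 2.0 / (3.0 * cone_k)) * math.pi * r * r)

ring = [((1.0, 0.0, 0.0), 0.25), ((-1.0, 0.0, 0.0), 0.25),
        ((0.0, 1.0, 0.0), 0.25), ((0.0, -1.0, 0.0), 0.25), ((5.0, 0.0, 0.0), 100.0)]
print(radiance_estimate(ring, (0.0, 0.0, 0.0), 4, 1.0))              # plain estimate
print(radiance_estimate(ring, (0.0, 0.0, 0.0), 4, 1.0, cone_k=2.0))  # cone-filtered
```

Because the estimate depends only on the photons near a point, the same photon map can serve any viewpoint.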

Excluding participating media, lighting can be separated into four types: direct illumination, specular reflection, caustics, and multiple diffuse reflections. In general, the global photon map provides an approximation of the overall illumination and is used to concentrate ray samples where they matter most. Direct illumination, however, must be computed accurately with ray tracing, because the eye is highly sensitive to direct lighting effects such as shadow edges. To check the contribution of each light source at a point, the algorithm sends shadow rays from the point toward the light source to determine whether the light is blocked. If it is not blocked, the light source's contribution is included in the calculation. This check becomes costly for large area light sources, which create soft shadows, so Jensen also describes an optimization using shadow photons. Where a photon hits a surface, a shadow photon continues from that point in the original photon's direction, passing through the object, and is stored in the photon map at the next surface it hits. During rendering, the photons near a point then indicate whether shadow rays are needed. A region with only direct photons is fully lit, and a region with only shadow photons is fully in shadow. Only regions with both kinds require shadow rays. [21]
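A Python sketch of the shadow-ray test shows why area lights are expensive. It assumes that the occluders are spheres and that an area light is represented by a list of sample points. One shadow ray is cast per light sample, and the visible fraction gives the soft-shadow factor. All names are illustrative.

```python
import math

Vec = tuple[float, float, float]

def segment_hits_sphere(start: Vec, end: Vec, center: Vec, radius: float) -> bool:
    """True if the sphere blocks the segment strictly between start and end."""
    d = [e - s for s, e in zip(start, end)]
    f = [s - c for s, c in zip(start, center)]
    a = sum(x * x for x in d)
    b = 2.0 * sum(x * y for x, y in zip(f, d))
    c = sum(x * x for x in f) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    eps = 1e-6  # ignore hits at the surface point itself or at the light
    return any(eps < t < 1.0 - eps for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)))

def light_visible(point: Vec, light: Vec, spheres) -> bool:
    """Shadow ray: the light contributes only if no sphere blocks the segment to it."""
    return not any(segment_hits_sphere(point, light, c, r) for c, r in spheres)

def area_light_visibility(point: Vec, light_samples, spheres) -> float:
    """Fraction of an area light's sample points visible from point: one shadow ray each."""
    return sum(light_visible(point, s, spheres) for s in light_samples) / len(light_samples)

blocker = [((0.0, 5.0, 0.0), 1.0)]
samples = [(-3.0, 10.0, 0.0), (0.0, 10.0, 0.0), (3.0, 10.0, 0.0)]
print(area_light_visibility((0.0, 0.0, 0.0), samples, blocker))  # partly shadowed
```

The work grows with the number of light samples times the number of occluders tested at every shaded point. This is the cost that shadow photons help avoid in regions that are clearly fully lit or fully shadowed.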

For multiple diffuse reflections (e.g., color bleeding), rays are also directed by photon concentrations in the global photon map. Specular reflections are not stored in the photon maps because they are more accurately rendered with ray tracing samples. Caustics are more accurately rendered using information from the caustic photon map. [22]

Our scenes did not require the third photon map for volumes or participating media. More information on extending photon mapping for participating media can be found in Jensen's book.

How POV-Ray Handles Photon Mapping

POV-Ray 3.6, written in C++, stores photons in a kd-tree that is laid out in an array of pointers to photon blocks of a fixed, power-of-two size. [23]
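The Python sketch below illustrates the general idea of a photon array split into fixed-size, power-of-two blocks. It is not POV-Ray's code, and the block size and class name are hypothetical. One plausible benefit of this layout is that the array can grow by adding blocks without copying photons that are already stored. With a power-of-two block size, the block number and offset can also be computed with a shift and a mask instead of division and modulo.

```python
BLOCK_BITS = 4                   # hypothetical: 2**4 = 16 photons per block (tiny, for illustration)
BLOCK_SIZE = 1 << BLOCK_BITS
BLOCK_MASK = BLOCK_SIZE - 1

class BlockedPhotonArray:
    """Photons stored in fixed-size blocks that are reached through a list of block references."""

    def __init__(self) -> None:
        self.blocks: list[list] = []
        self.count = 0

    def append(self, photon) -> None:
        if (self.count & BLOCK_MASK) == 0:   # every existing block is full: add a new one
            self.blocks.append([None] * BLOCK_SIZE)
        self.blocks[self.count >> BLOCK_BITS][self.count & BLOCK_MASK] = photon
        self.count += 1

    def __getitem__(self, index: int):
        if not 0 <= index < self.count:
            raise IndexError(index)
        # With a power-of-two block size, a shift and a mask replace division and modulo.
        return self.blocks[index >> BLOCK_BITS][index & BLOCK_MASK]

    def __len__(self) -> int:
        return self.count

photons = BlockedPhotonArray()
for k in range(40):
    photons.append(("photon", k))
print(len(photons), len(photons.blocks), photons[17])  # 40 photons spread over 3 blocks
```

A kd-tree stored in such an array can address its nodes by index, just as it would in one contiguous array.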

For the most part, POV-Ray follows Jensen's method. It uses three photon maps, called photonMap (for caustics), globalPhotonMap, and mediaPhotonMap.

There is additional code for handling POV-Ray's manner of describing objects with multiple layers of textures. Color is an average of all the layers, weighted by each layer's opacity. Refraction depends on the transparency that remains after all the layers are added. Diffuse and reflection values are taken from the top layer only. [24]

For the global photon map, the program determines whether the photon is reflected, refracted, diffused, or absorbed. Interestingly, for the caustic photon map, the photon is always both reflected and refracted: rather than choosing one interaction, the program follows both. This deviates from Jensen's algorithm, which chooses a single interaction by Russian Roulette and relies on averaging an adequate number of samples to render the correct behavior. [25]
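The difference between the two strategies can be checked numerically with a small Python sketch. It is not POV-Ray's implementation. It assumes a hypothetical reflectance R that divides a photon's power between the reflected and refracted branches. Splitting traces both branches with scaled power, while Russian Roulette traces one branch with full power. Both give the same expected reflected power.

```python
import random

def split_photon(power: float, reflectance: float):
    """Follow both branches: a reflected and a refracted photon with the power divided by R."""
    return [("reflect", power * reflectance), ("refract", power * (1.0 - reflectance))]

def roulette_photon(power: float, reflectance: float, rng: random.Random):
    """Follow one branch chosen at random; the chosen photon keeps its full power."""
    return [("reflect", power)] if rng.random() < reflectance else [("refract", power)]

def mean_reflected_power(make_photons, trials: int) -> float:
    """Average reflected power per incoming photon over many trials."""
    total = 0.0
    for _ in range(trials):
        total += sum(p for kind, p in make_photons() if kind == "reflect")
    return total / trials

rng = random.Random(498)
R = 0.3  # hypothetical reflectance
print(mean_reflected_power(lambda: split_photon(1.0, R), 10))             # exactly about 0.3
print(mean_reflected_power(lambda: roulette_photon(1.0, R, rng), 100_000))  # close to 0.3
```

Splitting removes the randomness of the choice, but the number of photons doubles at every specular interface a photon crosses. Russian Roulette keeps one photon per path and instead relies on averaging many photons.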

Troubleshooting POV-Ray

When photons are being used in POV-Ray, soft shadows only appear if there are one or more area lights. The area_light property must be included in the light description for POV-Ray's photon mapping to recognize it as an area light and emit photons accordingly.

Undesired Effect Due to Difference

An undesired effect occurs when a bowl is modeled as the difference of two shapes: reflections on a specular surface inside the bowl are traced incorrectly. Instead, each bowl was modeled as a union of mesh2 objects. Mesh2 is faster to parse than the standard mesh object because it is closer to POV-Ray's internal mesh representation. [26]

Simple Glass Bowl

The bowls were created in Google SketchUp 7 and exported to POV-Ray with the SU2POV 3.5 plugin by Didier Bur.

Sources

[1] Edwards, Geoffrey, and Garry Sommerfeld. Art of Glass: Glass in the Collection of the National Gallery of Victoria. Melbourne: National Gallery of Victoria, 1998. Electronic http://www.google.com/books?hl=zh-EN&lr=&id=laWX3BnLENgC. 101.

[2] Hill, F.S. Computer Graphics Using OpenGL. S.l.: Pearson Education, 2001. Print. 419-20; Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. Natick, MA: A K Peters, 2009. Print. 84.

[3] Jensen, Henrik W. Realistic Image Synthesis Using Photon Mapping. Natick, MA: A.K. Peters, Ltd, 2009. Print. 91.

[4] Hill, F.S. Computer Graphics Using OpenGL. 413-16; Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 82-83.

[5] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 623; Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. In ACM SIGGRAPH 2004 Course Notes (SIGGRAPH '04). ACM, New York, NY, USA, Article 20. DOI=10.1145/1103900.1103920 Electronic http://doi.acm.org/10.1145/1103900.1103920. 8.

[6] Ionascu, Sergiu. "I.O.R.(index of Refraction) Settings." Ionascu.org. 24 Mar. 2011. Web. May 2011. Web http://www.ionascu.org/i-o-r-index-of-refraction-settings/; "POV-Ray: Documentation: 2.6.1.4 Refraction." POV-Ray. Persistence of Vision Raytracer Pty. Ltd, 2008. Web. May 2011. Web http://www.povray.org/documentation/view/3.6.0/414/.

[7] Hill, F.S. Computer Graphics Using OpenGL. 413, 416-17; Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 82-83.

[8] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 518.

[9] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 8; Jensen, Henrik W. Realistic Image Synthesis Using Photon Mapping; Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 520.

[10] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 69.

[11] Hill, F.S. Computer Graphics Using OpenGL. 744-45; Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 71-85.

[12] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 309-10.

[13] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 311.

[14] Shirley, P, and Stephen R. Marschner. Fundamentals of Computer Graphics. 311.

[15] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 39-40.

[16] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 18-19.

[17] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 22.

[18] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 23.

[19] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 8.

[20] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 28, 33.

[21] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 35-36; Jensen, Henrik W. Realistic Image Synthesis Using Photon Mapping.

[22] Jensen, Henrik W. A practical guide to global illumination using ray tracing and photon mapping. 39.

[23] POV-Ray. POV-Ray 3.62 Windows Source: "photon.cpp". Persistence of Vision Raytracer Pty. Ltd, 2008. C++ file.

[24] POV-Ray. POV-Ray 3.62 Windows Source: "lighting.cpp". Persistence of Vision Raytracer Pty. Ltd, 2008. C++ file.

[25] POV-Ray. POV-Ray 3.62 Windows Source: "lighting.cpp".

[26] "POV-Ray: Documentation: 1.3.2.2 Mesh2 Object." POV-Ray. Persistence of Vision Raytracer Pty. Ltd, 2008. Web. May 2011. Web http://www.povray.org/documentation/view/3.6.1/68/.